The Algorithmic Cage: When Justice Becomes Code

Table of Contents

- The Algorithmic Cage: When Justice Becomes Code

- Algorithmic Detention: An Expert’s Take on Justice & Code – Time.news

Imagine a world where your freedom isn’t decided by a judge, but by an algorithm. A world where data points determine your fate, and human biases are amplified through lines of code. Is this the future of justice, or a dystopian nightmare unfolding before our eyes?

The Rise of Algorithmic Detention

The concept of algorithmic detention, where algorithms are used to assess risk and make decisions about pretrial detention or immigration detention, is rapidly gaining traction. While proponents argue that these tools can improve efficiency and reduce bias, critics warn of the potential for discrimination, lack of transparency, and violations of human rights [[3]].

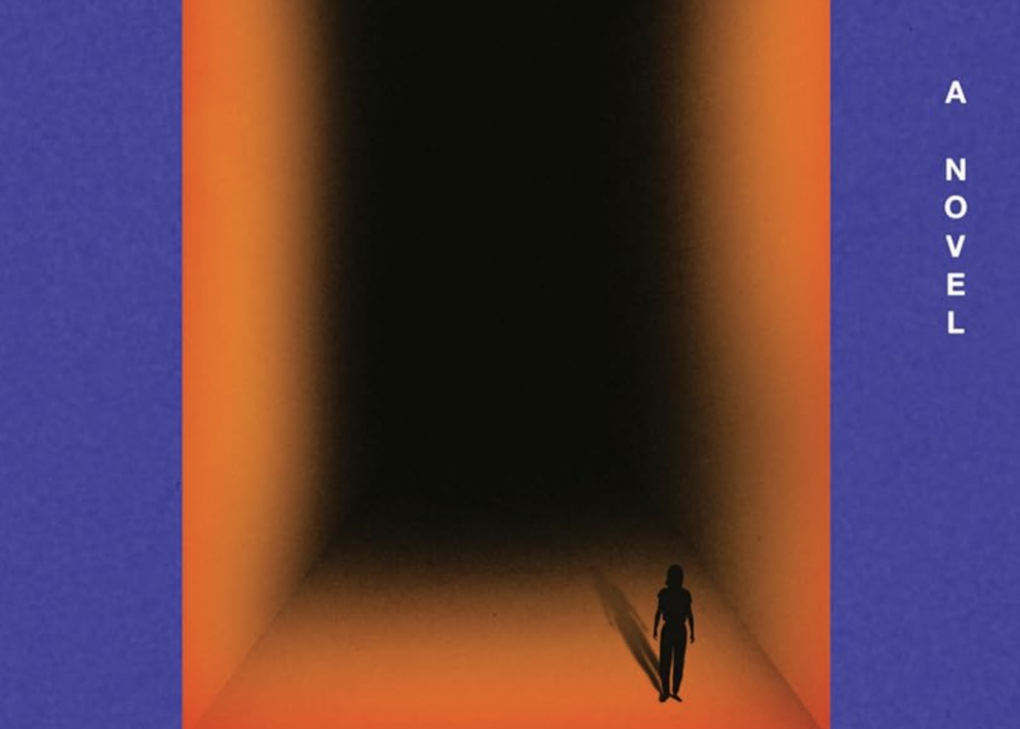

The novel excerpt paints a stark picture of this reality, mirroring the experiences of individuals caught in the web of private immigration detention centers, facing indefinite confinement with no clear legal recourse. The protagonist, Sara, embodies the frustration and disorientation of those whose lives are reduced to the confines of a cell, their fate dictated by unseen forces.

The Allure and the Peril: Why Algorithms?

Why are governments and law enforcement agencies turning to algorithms for detention decisions? The promise of objectivity and efficiency is a powerful draw. Algorithms, it is argued, can process vast amounts of data and identify patterns that humans might miss, leading to more accurate risk assessments and better resource allocation [[2]].

However, this allure masks a darker side. Algorithms are only as good as the data they are trained on. If that data reflects existing societal biases – racial profiling in policing, for example – the algorithm will perpetuate and even amplify those biases. This can lead to discriminatory outcomes, with certain groups being disproportionately targeted for detention.
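To make the amplification mechanism concrete, here is a toy simulation in Python. It assumes a hypothetical, deliberately simplified enforcement policy (all attention goes to whichever group has the most recorded incidents) and invented numbers; it does not model any real system. Both groups offend at the same true rate, but because only the patrolled group's incidents are ever recorded, a one-record head start keeps widening.

```python
from typing import Dict


def simulate_feedback(recorded: Dict[str, int], true_rate: Dict[str, int], rounds: int) -> Dict[str, int]:
    """Toy model of a data feedback loop (hypothetical policy, not any real system).

    Each round, enforcement goes to whichever group has the most *recorded*
    incidents, and only that group's incidents are observed and added to the data.
    """
    recorded = dict(recorded)  # copy so the caller's data is not mutated
    for _ in range(rounds):
        # Decision is driven by past records, not by the (equal) true rates.
        target = max(recorded, key=recorded.get)
        # Only the targeted group generates new records this round.
        recorded[target] += true_rate[target]
    return recorded


# Both groups offend at the same true rate; group A merely starts with one extra record.
history = simulate_feedback({"A": 11, "B": 10}, {"A": 1, "B": 1}, rounds=5)
print(history)  # {'A': 16, 'B': 10}
```

Even in this crude model, the data never reveals that the two groups behave identically, because what gets collected depends on what the system decided earlier.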

The Illusion of Objectivity

The belief that algorithms are inherently objective is a dangerous myth. As Cathy O’Neil argues in her book “Weapons of Math Destruction,” algorithms are often opaque, unregulated, and prone to reinforcing existing inequalities. The very act of selecting which data points to include in an algorithm involves human judgment, opening the door to bias.

Consider the case of COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), a widely used risk assessment tool in the US criminal justice system. Studies have shown that COMPAS is considerably more likely to falsely flag Black defendants as high-risk than white defendants, even when controlling for prior criminal history.
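The COMPAS finding concerns false positive rates: among people who did not go on to reoffend, what share were nonetheless labeled high-risk. The sketch below uses invented toy records (not real COMPAS data) and hypothetical group labels "X" and "Y" to show how an auditor might compute that rate separately for each group.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Case:
    group: str
    flagged_high_risk: bool  # the tool's prediction
    reoffended: bool         # the observed outcome


def false_positive_rate(cases: List[Case], group: str) -> float:
    """Share of people in `group` who did NOT reoffend but were still flagged high-risk."""
    negatives = [c for c in cases if c.group == group and not c.reoffended]
    if not negatives:
        raise ValueError(f"no non-reoffending cases for group {group!r}")
    # Booleans sum as 0/1, so this counts the false positives.
    return sum(c.flagged_high_risk for c in negatives) / len(negatives)


# Invented toy records for illustration only.
cases = [
    Case("X", True, False), Case("X", True, False), Case("X", False, False), Case("X", False, False),
    Case("Y", True, False), Case("Y", False, False), Case("Y", False, False), Case("Y", False, False),
    Case("X", True, True), Case("Y", True, True),
]
for g in ("X", "Y"):
    print(g, false_positive_rate(cases, g))
# X 0.5
# Y 0.25
```

A tool can look similarly accurate overall while the cost of its mistakes falls unevenly; comparing per-group error rates is one way to surface that.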

The Human Cost: Sara’s Story and Beyond

The novel excerpt highlights the profound psychological impact of algorithmic detention. Sara’s experience of being “incarcerated without due process” resonates with the real-life struggles of countless individuals held in immigration detention centers and pretrial facilities across the country.

The loss of freedom, the uncertainty about the future, and the feeling of being reduced to a set of data points can be devastating. The excerpt poignantly captures Sara’s “harrowing sense of disorientation” as her world shrinks to the confines of a cell. Her struggle to contain her rage, knowing that any expression of dissent will only worsen her situation, is a powerful indictment of a system that dehumanizes individuals.

Immigration Detention in the United States

The US immigration detention system has faced increasing scrutiny in recent years, with concerns raised about the conditions of detention, the lack of due process, and the use of algorithms to determine who is detained and for how long. The “bed mandate,” which required ICE (Immigration and Customs Enforcement) to maintain a certain number of detention beds, further incentivized the detention of migrants [[1]]. Although the bed mandate was in effect only from 2009 to 2017, its legacy continues to shape the landscape of immigration detention.

The rise of private immigration detention centers, as referenced in the novel excerpt, adds another layer of complexity. These for-profit facilities have a financial incentive to keep beds filled, raising concerns about potential abuses and a lack of accountability.

The International Human Rights Implications

Algorithmic detention raises serious concerns under international human rights law. Article 9 of the International Covenant on Civil and Political Rights (ICCPR) guarantees the right to liberty and security of person and prohibits arbitrary arrest or detention [[3]].

Critics argue that algorithmic detention determinations, particularly in armed conflict settings where data may be limited or unreliable, are inherently arbitrary and violate the ICCPR. The lack of transparency and due process associated with these systems further undermines fundamental human rights principles.

Interpreting “Judge” in the Age of Algorithms

One of the key legal questions surrounding algorithmic detention is whether an algorithm can be considered a “judge” within the meaning of international human rights law, which requires that anyone detained on a criminal charge be brought promptly before “a judge or other officer authorized by law to exercise judicial power” (ICCPR, Article 9(3)). Some argue that algorithms, when properly designed and implemented, can provide a more objective and efficient assessment of risk than human judges. Others contend that algorithms lack the human judgment, empathy, and accountability necessary to make fair and just decisions about detention.

The debate over the role of algorithms in detention is likely to continue for years to come. As technology advances and algorithmic systems become more sophisticated, it will be crucial to develop clear legal and ethical frameworks to ensure that these tools are used in a way that respects human rights and promotes justice.

Pros and Cons of Algorithmic Detention

To fully understand the implications of algorithmic detention, it’s essential to weigh the potential benefits against the potential risks.

Pros:

- Increased Efficiency: Algorithms can process large amounts of data quickly, potentially speeding up detention decisions and reducing backlogs.

- Reduced Bias: Proponents argue that algorithms can be designed to be more objective than human decision-makers, reducing the impact of personal biases.

- Improved Accuracy: Algorithms can identify patterns and correlations that humans might miss, potentially leading to more accurate risk assessments.

- Resource Optimization: By identifying high-risk individuals, algorithms can help law enforcement agencies allocate resources more effectively.

Cons:

- Bias Amplification: If the data used to train an algorithm reflects existing societal biases, the algorithm will perpetuate and amplify those biases.

- Lack of Transparency: Many algorithmic systems are opaque, making it difficult to understand how they work and why they make certain decisions.

- Due Process Concerns: Algorithmic detention can undermine due process rights by denying individuals the opportunity to challenge the basis for their detention.

- Dehumanization: Reducing individuals to a set of data points can lead to a dehumanizing and unjust system of detention.

- Lack of Accountability: It can be difficult to hold algorithms accountable for their decisions, particularly when those decisions have negative consequences.

The Future of Justice: A Call for Caution

As we move further into the age of automation, it’s crucial to approach the use of algorithms in the criminal justice and immigration systems with caution. While these tools hold the potential to improve efficiency and reduce bias, they also pose significant risks to human rights and fundamental freedoms.

We must demand transparency, accountability, and robust oversight to ensure that algorithms are used in a way that promotes justice and protects the rights of all individuals. The story of Sara, trapped in the algorithmic cage, serves as a powerful reminder of the human cost of unchecked technological advancement.

FAQ: Algorithmic Detention

What is algorithmic detention?

Algorithmic detention refers to the use of algorithms to assess risk and make decisions about pretrial detention, immigration detention, or other forms of confinement. These algorithms analyze data points to predict the likelihood of an individual re-offending, failing to appear in court, or posing a threat to public safety.

How are algorithms used in detention decisions?

Algorithms typically analyze data such as criminal history, employment status, address stability, and other factors to generate a risk score. This score is then used by judges, immigration officials, or other decision-makers to determine whether an individual should be detained or released.
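A minimal sketch of how a points-based risk score of this kind might work, in Python. The factors mirror those listed above, but the weights, the cap, and the band cut-offs are all hypothetical, chosen only for illustration; real tools use their own formulas, which are often not disclosed.

```python
from dataclasses import dataclass


@dataclass
class Defendant:
    prior_convictions: int
    employed: bool
    years_at_address: float


def risk_score(d: Defendant) -> int:
    """Hypothetical points-based risk score; the weights are invented for illustration."""
    score = 2 * min(d.prior_convictions, 5)  # cap so criminal history alone tops out at 10 points
    if not d.employed:
        score += 2
    if d.years_at_address < 1:  # treated here as a sign of low address stability
        score += 1
    return score


def risk_band(score: int) -> str:
    """Map the numeric score to the label a decision-maker would see (cut-offs are hypothetical)."""
    if score >= 8:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


d = Defendant(prior_convictions=3, employed=False, years_at_address=0.5)
print(risk_score(d), risk_band(risk_score(d)))  # 9 high
```

Every weight and cut-off here is a human choice, which is exactly where the judgment described earlier in this article enters the code.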

What are the potential benefits of algorithmic detention?

Potential benefits include increased efficiency, reduced bias (in theory), improved accuracy in risk assessment, and better resource allocation.

What are the potential risks of algorithmic detention?

Potential risks include bias amplification, lack of transparency, due process concerns, dehumanization, and lack of accountability.

Is algorithmic detention legal?

The legality of algorithmic detention is a complex and evolving issue. Critics argue that it can violate due process rights and international human rights law. The legal framework surrounding algorithmic detention is still being developed in many jurisdictions.

How can we ensure that algorithms are used fairly in detention decisions?

Key steps include demanding transparency, ensuring data quality and accuracy, implementing robust oversight mechanisms, providing opportunities for individuals to challenge algorithmic decisions, and addressing potential biases in the design and implementation of algorithms.
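Transparency and the ability to challenge a decision go together: a person can only dispute a score if they can see what went into it. The hypothetical sketch below (invented factor names and weights, not any real tool's) returns each factor's contribution alongside the total, so an erroneous input, such as a miscounted prior conviction, can be identified, corrected, and re-scored.

```python
from typing import Dict, List, Tuple

# Hypothetical factors and weights, for illustration only.
WEIGHTS: Dict[str, int] = {
    "prior_convictions": 2,       # points per recorded prior conviction
    "unemployed": 2,              # 0/1 flag
    "moved_within_last_year": 1,  # 0/1 flag
}


def itemized_score(record: Dict[str, int]) -> Tuple[int, List[Tuple[str, int]]]:
    """Return the total score plus each factor's contribution, so every
    input that raised the score can be inspected and contested."""
    items = [(name, WEIGHTS[name] * record.get(name, 0)) for name in WEIGHTS]
    total = sum(points for _, points in items)
    return total, items


record = {"prior_convictions": 2, "unemployed": 1, "moved_within_last_year": 1}
total, items = itemized_score(record)
for name, points in items:
    print(f"{name}: +{points}")
print("total:", total)  # total: 7

# Suppose a challenge shows only one prior conviction is accurate:
corrected = {**record, "prior_convictions": 1}
print("corrected total:", itemized_score(corrected)[0])  # corrected total: 5
```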

Algorithmic Detention: An Expert’s Take on Justice & Code – Time.news

Is algorithmic detention a fair and effective tool for justice, or a perilous step towards a biased, automated future? We sit down with Dr. Aris Thorne, a leading expert in algorithmic bias and ethics, to unpack the complexities of algorithms in detention decisions.

Time.news: Dr. Thorne, thank you for joining us. The concept of algorithmic detention is becoming increasingly prevalent. Can you explain to our readers what it is and why it’s gaining traction?

Dr. Thorne: Certainly. Algorithmic detention refers to using algorithms to assess an individual’s risk level and inform decisions about pretrial detention, immigration detention, and other forms of confinement. The allure lies in the promise of objectivity and efficiency. Proponents argue these systems can process vast amounts of data and identify patterns humans might miss, leading to more accurate risk assessments and better resource allocation [[2]].

Time.news: The article highlights the story of “Sara,” a fictional character facing indefinite confinement based on algorithmic assessment. How does this reflect the real-world implications of algorithmic bias?

Dr. Thorne: Sara’s story regrettably echoes the experiences of many individuals caught in the web of automated systems. The key is that algorithms are only as good as the data they are trained on. If that data reflects societal biases (racial profiling, for example), the algorithm will perpetuate and even amplify those biases. The Algorithmic Justice League [[2]][[3]] is doing great work highlighting these issues.

Time.news: Can you provide a concrete example of how this plays out in the criminal justice system?

Dr. Thorne: A prime example is COMPAS (Correctional Offender Management Profiling for Alternative Sanctions). Studies have shown this widely used risk assessment tool is considerably more likely to falsely flag Black defendants as high-risk than white defendants, even when controlling for prior criminal history. This demonstrates how algorithmic risk assessment can exacerbate existing inequalities.

Time.news: The article mentions the debate surrounding whether an algorithm can be considered a “judge” under international human rights law. What are your thoughts?

Dr. Thorne: That’s a crucial question. I believe that while algorithms can provide data points, they lack the human judgment, empathy, and accountability necessary to make fair and just decisions about detention. The International Covenant on Civil and Political Rights (ICCPR) guarantees the right to liberty, and it’s difficult to argue that an algorithm, operating in a potentially opaque manner, can adequately uphold those rights [[3]].

Time.news: The “bed mandate” for ICE is mentioned in the context of immigration detention in the United States. How does this policy intersect with the use of algorithms?

Dr. Thorne: The “bed mandate,” which required ICE to maintain a certain number of detention beds, created a financial incentive for detention, further complicating the use of algorithmic risk assessment. While the mandate itself was rescinded, its legacy continues to influence practices. This environment may incentivize the use of algorithms to justify maintaining high detention numbers, even if they produce biased or inaccurate results [[2]]. As for making these systems fairer, several steps are needed. First, ensuring data quality and accuracy is essential. Second, implementing robust oversight mechanisms and independent audits can help identify and address biases. Third, providing opportunities for individuals to challenge algorithmic decisions is crucial for due process. Organizations like the Algorithmic Justice League [[3]] are doing critical work in raising awareness and advocating for these changes. We need to foster a broader public conversation about the ethical implications of AI in the justice system.

Time.news: Dr. Thorne, thank you for your insights.

Dr. Thorne: My pleasure.