Even experts with an uncanny ability to recognize faces are now struggling to tell whether a face is real or fabricated by artificial intelligence. Without training, these “super recognizers” perform no better than chance when identifying AI-generated faces, a startling revelation about the increasing sophistication of synthetic media.

The Rise of Hyperrealistic Fakes

The proliferation of AI-generated images online has created a new challenge: distinguishing between authentic photographs and those created using complex algorithms called generative adversarial networks. These networks pair two components: a generator that produces a fake image and a “discriminator” that assesses its realism. The discriminator's verdict is fed back to the generator, and through repeated iterations, the fakes become remarkably convincing.

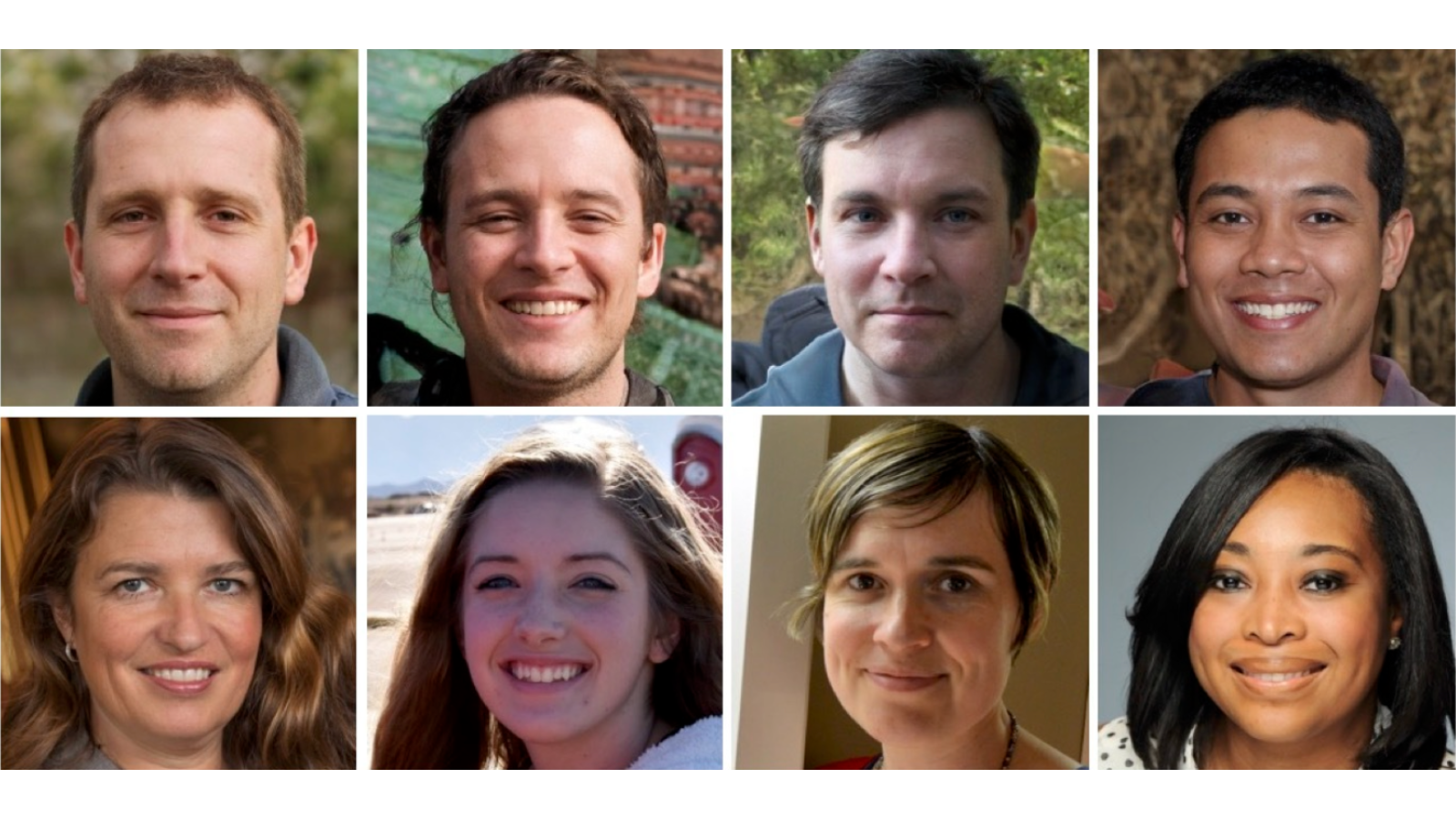

Can you spot a fake face? Researchers have found that people often believe AI-generated faces are more real than actual human faces—a phenomenon known as “hyperrealism.”

A study published Nov. 12 in the journal Royal Society Open Science revealed that people with typical face-recognition abilities are even more likely than super recognizers to be fooled, often mistaking AI creations for genuine photographs. However, the research also offered a glimmer of hope: just five minutes of training on common AI rendering errors significantly improved people’s ability to detect the fakes.

- AI-generated faces are becoming indistinguishable from real ones, even for experts.

- People often perceive AI faces as more realistic than actual human faces.

- Brief training on AI rendering flaws can substantially improve fake-face detection.

- Super recognizers, while typically skilled at facial recognition, aren’t immune to being fooled.

“I think it was encouraging that our quite short training procedure increased performance in both groups quite a lot,” said Katie Gray, an associate professor in psychology at the University of Reading in the U.K.

Surprisingly, the training boosted accuracy by similar amounts in both super recognizers and typical recognizers. Because super recognizers kept their lead even after both groups learned to look for rendering errors, their advantage probably does not come from a superior ability to spot those errors alone; they appear to rely on a different set of cues when identifying fake faces.

Researchers are now exploring ways to leverage the enhanced detection skills of super recognizers. The authors of the study propose a “human-in-the-loop” approach, combining AI detection algorithms with the judgment of trained super recognizers.

The training regimens used in these studies focus on identifying common flaws in AI-generated faces, such as an extra middle tooth, an unnatural hairline, or oddly textured skin. They also highlight that fake faces tend to be more proportionally “perfect” than real ones.

Super recognizers are individuals who excel at facial perception and recognition tasks, often distinguishing between two photographs of unfamiliar people with remarkable accuracy. The Greenwich Face and Voice Recognition Laboratory volunteer database provided the super recognizers for this study; participants had to perform in the top 2% of individuals in facial recognition tasks to qualify.

In initial experiments, super recognizers correctly identified only 41% of AI-generated faces, no better than random guessing, which would yield about 50%. Typical recognizers fared even worse, correctly identifying about 30% of the fakes. Both groups were also prone to misidentifying real faces as fake: 39% of the time for super recognizers and around 46% for typical recognizers.

After the five-minute training session, detection accuracy improved significantly. Super recognizers spotted 64% of fake faces, while typical recognizers identified 51%. The rate of misidentifying real faces as fake remained relatively consistent, with super recognizers and typical recognizers incorrectly flagging real faces in 37% and 49% of cases, respectively.
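
To see how the two kinds of error combine, the reported rates can be folded into a single overall score. The sketch below is only an illustration, not the study's own analysis: it assumes each participant saw equal numbers of real and fake faces (which the figures above do not state), and the names `balanced_accuracy`, `REPORTED`, and `summarize` are made up for this example.

```python
def balanced_accuracy(hit_rate, false_alarm_rate):
    """Average of two rates: fakes correctly flagged and real faces correctly accepted.

    ASSUMPTION: real and fake faces were shown in equal numbers.
    """
    correct_rejection_rate = 1.0 - false_alarm_rate
    return (hit_rate + correct_rejection_rate) / 2.0


# (share of fakes detected, share of real faces wrongly flagged as fake),
# taken from the percentages quoted in the article.
REPORTED = {
    "super recognizers": {"untrained": (0.41, 0.39), "trained": (0.64, 0.37)},
    "typical recognizers": {"untrained": (0.30, 0.46), "trained": (0.51, 0.49)},
}


def summarize(reported):
    """Return overall accuracy before and after training, plus the gain in fakes detected."""
    rows = {}
    for group, conditions in reported.items():
        untrained_hits, untrained_false_alarms = conditions["untrained"]
        trained_hits, trained_false_alarms = conditions["trained"]
        rows[group] = {
            "before": balanced_accuracy(untrained_hits, untrained_false_alarms),
            "after": balanced_accuracy(trained_hits, trained_false_alarms),
            "hit_gain": trained_hits - untrained_hits,
        }
    return rows


if __name__ == "__main__":
    for group, row in summarize(REPORTED).items():
        print(
            f"{group}: overall {row['before']:.1%} -> {row['after']:.1%}, "
            f"fakes detected up {row['hit_gain'] * 100:.0f} points"
        )
```

Under that equal-split assumption, untrained super recognizers come out at roughly 51% overall and untrained typical recognizers at roughly 42%, while the trained groups reach roughly 63.5% and 51%. The gain in fakes detected is about 23 percentage points for super recognizers and 21 for typical recognizers, which is the "similar amounts" pattern described earlier.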

Participants who received the training also took longer to scrutinize the images—about 1.9 seconds longer for typical recognizers and 1.2 seconds longer for super recognizers. Gray emphasized that slowing down and carefully examining facial features is crucial when trying to determine if a face is real or fake.

However, it’s important to note that the training’s effects were measured immediately after the session, and the long-term impact remains unclear. “The training cannot be considered a lasting, effective intervention, since it was not re-tested,” noted Meike Ramon, a professor of applied data science at the Bern University of Applied Sciences in Switzerland.

Ramon also pointed out that the study used different participants for the initial and training phases, making it difficult to determine how much improvement occurs within an individual. A more definitive assessment would require testing the same people before and after training.