The AI Revolution on Your Desktop: How NVIDIA and Microsoft are Transforming the PC Experience

Table of Contents

- The AI Revolution on Your Desktop: How NVIDIA and Microsoft are Transforming the PC Experience

- The AI Revolution is Here: NVIDIA and Microsoft Redefine the PC Experience – An Expert Interview

Imagine a world where your computer anticipates your needs, assists with creative tasks, and learns your preferences in real time. That future is closer than you think, thanks to a collaboration between NVIDIA and Microsoft that is pushing the boundaries of generative AI on PCs.

Unlocking AI Power with RTX AI PCs

NVIDIA RTX AI PCs are at the forefront of this transformation, combining RTX GPU hardware with optimized software to simplify AI experimentation and maximize performance on Windows 11. The result is faster, more efficient AI-driven applications running locally on your desktop.

What Makes RTX AI PCs Different?

The key is NVIDIA TensorRT, reimagined for RTX AI PCs. This version combines industry-leading performance with on-device engine building: optimized engines for AI models are built on the PC itself, tailored to the specific RTX GPU installed. Its package is also 8x smaller, making AI deployment across more than 100 million RTX AI PCs far more practical.
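On-device engine building means the expensive, GPU-specific optimization step runs on the user's own machine, and its result can then be reused. The sketch below is a generic illustration of that build-once, reuse-later pattern, not TensorRT's API: the function names, the `.engine` file naming, and the `build` callback (a hypothetical stand-in for the real GPU-specific compiler) are all assumptions. Keying the cache on the GPU name and driver version means a different GPU or driver triggers a fresh build.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable


def engine_cache_key(model_bytes: bytes, gpu_name: str, driver_version: str) -> str:
    """Hash the model together with the GPU identity, so each GPU/driver gets its own engine."""
    h = hashlib.sha256()
    for part in (model_bytes, gpu_name.encode("utf-8"), driver_version.encode("utf-8")):
        # Length-prefix each part so different splits of the same bytes cannot collide.
        h.update(len(part).to_bytes(8, "little"))
        h.update(part)
    return h.hexdigest()


def get_or_build_engine(
    model_bytes: bytes,
    gpu_name: str,
    driver_version: str,
    cache_dir: Path,
    build: Callable[[bytes], bytes],  # hypothetical stand-in for a GPU-specific compiler
) -> bytes:
    """Return a cached engine for this GPU, building and caching it on first use."""
    path = cache_dir / (engine_cache_key(model_bytes, gpu_name, driver_version) + ".engine")
    if path.exists():
        return path.read_bytes()
    engine = build(model_bytes)  # expensive step runs once per model/GPU/driver combination
    cache_dir.mkdir(parents=True, exist_ok=True)
    path.write_bytes(engine)
    return engine


if __name__ == "__main__":
    def fake_build(model: bytes) -> bytes:
        # Hypothetical compiler: just tags the model bytes.
        return b"engine-for:" + model

    with tempfile.TemporaryDirectory() as tmp:
        print(get_or_build_engine(b"model-bytes", "example-gpu", "example-driver", Path(tmp), fake_build))
```

The first call on a given GPU pays the build cost; later calls read the cached file.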

TensorRT for RTX: A Game Changer for AI Inference

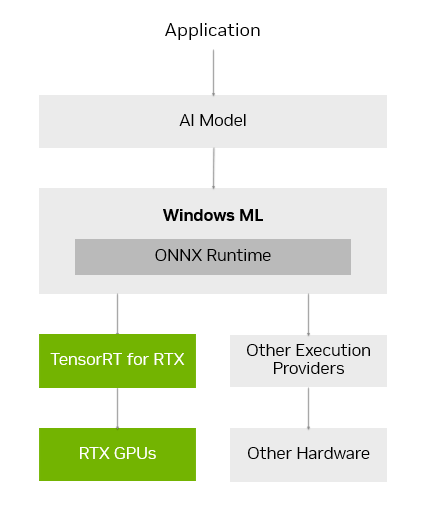

The traditional AI PC software stack often forces developers to choose between broad hardware support and hardware-specific performance optimizations. Windows ML, built on ONNX Runtime, addresses this by connecting to optimized AI execution layers supplied by the hardware manufacturers.
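In ONNX Runtime, hardware-specific acceleration plugs in through execution providers, and a session tries them in the order it is given. The sketch below uses plain ONNX Runtime rather than the Windows ML API, which performs this selection on its own. The preference order is an assumption chosen for illustration, and the names are ONNX Runtime's standard TensorRT, CUDA, DirectML, and CPU providers, not the TensorRT for RTX provider that Windows ML uses.

```python
from typing import Iterable, List, Sequence

# Preference order assumed for this sketch: most specialized first, CPU last.
PREFERRED_PROVIDERS = (
    "TensorrtExecutionProvider",  # NVIDIA TensorRT
    "CUDAExecutionProvider",      # NVIDIA CUDA
    "DmlExecutionProvider",       # DirectML
    "CPUExecutionProvider",       # fallback available in every build
)


def choose_providers(available: Iterable[str], preferred: Sequence[str] = PREFERRED_PROVIDERS) -> List[str]:
    """Order the installed providers by preference and always keep a CPU fallback last."""
    installed = set(available)
    chosen = [p for p in preferred if p in installed and p != "CPUExecutionProvider"]
    chosen.append("CPUExecutionProvider")
    return chosen


# With onnxruntime installed, the result would be passed to a session, e.g.:
#   import onnxruntime as ort
#   session = ort.InferenceSession(
#       "model.onnx", providers=choose_providers(ort.get_available_providers())
#   )
```

On a machine with only DirectML and CPU available, this yields `["DmlExecutionProvider", "CPUExecutionProvider"]`, so the GPU path is tried first and the CPU covers anything it cannot run.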

The Performance Boost You Need

For GeForce RTX GPUs, Windows ML automatically uses the TensorRT for RTX inference library. The result is over 50% faster performance for AI workloads compared to DirectML, which means smoother, more responsive AI applications.
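"Over 50% faster" is easiest to interpret as throughput. Assuming it means 1.5x as many inferences per second, each inference takes 1/1.5 of the original time, so latency drops by about a third rather than by half. The helper names below are hypothetical, chosen only to make the arithmetic concrete.

```python
def latency_after_speedup(latency_ms: float, speedup: float) -> float:
    """Per-inference latency after a throughput speedup (1.5 means '50% faster')."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return latency_ms / speedup


def time_saved_fraction(speedup: float) -> float:
    """Fraction of per-inference time removed by a given throughput speedup."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup
```

For example, a hypothetical 30 ms inference would take 20 ms at a 1.5x speedup, saving roughly 33% of the time.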

Expanding the AI Ecosystem on Windows 11

NVIDIA offers a wide range of SDKs (software development kits) to help developers integrate AI features and boost app performance. These include CUDA and TensorRT for GPU acceleration, DLSS and OptiX for 3D graphics, RTX Video and Maxine for multimedia, and Riva and ACE for generative AI.

Real-World Examples of AI Integration

Leading applications are already releasing updates to leverage NVIDIA SDKs:

- LM Studio: Upgraded to the latest CUDA version, resulting in a performance increase of over 30%.

- Topaz Labs: Releasing a generative AI video model, accelerated by CUDA, to enhance video quality.

- Chaos Enscape and Autodesk VRED: Adding DLSS 4 for faster performance and improved image quality.

- Bilibili: Integrating NVIDIA Broadcast features like Virtual Background to enhance livestreams.

NIM Microservices and AI Blueprints: Democratizing AI Development

Getting started with AI development can be overwhelming. NVIDIA NIM simplifies the process by providing a curated list of prepackaged, optimized AI models that run seamlessly on RTX GPUs.

The Power of NIM Microservices

NIM microservices are containerized, so the same package can run on PCs and in the cloud. They are available for download through build.nvidia.com and are integrated into popular AI apps such as AnythingLLM, ComfyUI, and AI Toolkit for Visual Studio Code.
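NIM LLM microservices expose an OpenAI-compatible HTTP API, so a locally running one can be queried with an ordinary HTTP request. In this sketch, which uses only the Python standard library, the URL (localhost, port 8000) is an assumption about a local deployment, and the model identifier should be replaced with the one listed for your chosen model on build.nvidia.com.

```python
import json
import urllib.request

# Assumed endpoint: a NIM LLM microservice running locally with an
# OpenAI-compatible API on port 8000. Adjust to match your deployment.
NIM_URL = "http://localhost:8000/v1/chat/completions"


def build_chat_request(model: str, prompt: str, max_tokens: int = 128) -> dict:
    """Build an OpenAI-style chat-completions payload."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def ask_nim(model: str, prompt: str, url: str = NIM_URL) -> str:
    """POST the request and return the first choice's text (needs a running service)."""
    body = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With a service running, a call would look like `ask_nim("your-model-id", "Explain TensorRT in one sentence.")`, where `your-model-id` is a placeholder.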

AI Blueprints: Jumpstarting Your AI Projects

NVIDIA AI Blueprints offer sample workflows and projects built on NIM microservices, providing a starting point for AI developers. For example, the NVIDIA AI Blueprint for 3D-guided generative AI lets users control image composition and camera angles by using a 3D scene as a reference.

Project G-Assist: Your AI Assistant for RTX PCs

Project G-Assist, an experimental AI assistant integrated into the NVIDIA app, allows users to control their GeForce RTX system using voice and text commands. This offers a more intuitive interface compared to traditional control panels.

Building Your Own AI Assistant

Developers can use Project G-Assist to build plug-ins, test assistant use cases, and share them through NVIDIA’s Discord and GitHub communities. The Project G-Assist Plug-in Builder, a ChatGPT-based app, simplifies plug-in development with natural language commands.
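Plug-ins of this kind are essentially function calling: the assistant picks a named function and passes it parameters, and the plug-in runs it and reports back. The sketch below illustrates that pattern only. The JSON shape (`func`, `params`, `success`, `message`) and the `set_lights` handler are hypothetical, invented for this example, and are not G-Assist's actual plug-in protocol; for the real interface, refer to NVIDIA's plug-in samples on GitHub.

```python
import json
from typing import Callable, Dict

# Hypothetical registry mapping function names the assistant may call to handlers.
HANDLERS: Dict[str, Callable[[dict], str]] = {}


def register(name: str):
    """Decorator that exposes a handler under a callable function name."""
    def wrap(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        HANDLERS[name] = fn
        return fn
    return wrap


@register("set_lights")
def set_lights(params: dict) -> str:
    # Placeholder: a real plug-in would call a smart-home service here.
    return f"Lights set to {params.get('color', 'white')}"


def dispatch(command_json: str) -> str:
    """Parse a JSON function call, run the matching handler, and return a JSON result."""
    try:
        command = json.loads(command_json)
        handler = HANDLERS[command["func"]]
        message = handler(command.get("params", {}))
        return json.dumps({"success": True, "message": message})
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return json.dumps({"success": False, "message": f"Bad command: {exc}"})
```

Adding a capability is then a matter of registering another handler; the dispatcher and the error reporting stay unchanged.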

Community-Driven Plug-Ins: Expanding Functionality

New open-source plug-in samples are available on GitHub, showcasing how on-device AI can enhance PC and gaming workflows:

- Gemini: Updated to include real-time web search capabilities.

- IFTTT: Automates tasks across hundreds of compatible endpoints, such as adjusting room lights or pushing gaming news to a mobile device.

- Discord: Shares game highlights or messages directly to Discord servers without disrupting gameplay.

G-Assist and Langflow: A Powerful Combination

Starting soon, G-Assist will be available as a custom component in Langflow, allowing users to integrate function-calling capabilities into low-code or no-code workflows, AI applications, and agentic flows.

The collaboration between NVIDIA and Microsoft is paving the way for a future where AI is seamlessly integrated into the PC experience, empowering users with new levels of creativity, productivity, and control. As AI technology continues to evolve, expect even more innovative applications and features to emerge, transforming the way we interact with our computers.

The AI Revolution is Here: NVIDIA and Microsoft Redefine the PC Experience – An Expert Interview

Keywords: AI PC, NVIDIA RTX, Microsoft, TensorRT, Windows ML, AI Development, Project G-Assist, Generative AI, AI Inference, PC Performance

Time.news: The world of PCs is on the cusp of a major transformation, driven by AI. NVIDIA and Microsoft are collaborating to bring powerful AI capabilities directly to our desktops. To delve deeper into this revolution, we’re joined by Dr. Anya Sharma, a leading expert in AI and computer architecture. Dr. Sharma, welcome!

Dr. Sharma: Thank you for having me. It’s an exciting time to be in this field.

Time.news: Let’s jump right in. The article highlights “RTX AI PCs” as a key component of this transformation. What makes these PCs different from standard PCs?

Dr. Sharma: The core difference lies in the hardware. NVIDIA RTX AI PCs are equipped with GPUs that are specifically designed to accelerate AI workloads. Think of it as having a specialized engine dedicated to AI tasks, allowing them to run much faster and more efficiently. Central to this is TensorRT for RTX, which builds engines optimized for each user’s GPU and ships in a much smaller package, making deployment seamless.

Time.news: TensorRT for RTX seems instrumental. Can you explain its importance for AI inference and how it impacts performance?

Dr. Sharma: Absolutely. AI inference, the process of applying a trained AI model to new data, can be computationally intensive. Traditionally, developers often had to choose between broad hardware support and hardware-specific performance optimizations. TensorRT for RTX addresses this by providing an optimized AI execution layer that applications reach through Windows ML.

This means that, rather than going through a generic execution path, AI applications can take full advantage of the RTX GPU’s Tensor Cores. The article mentions a performance increase of over 50% compared with DirectML, and that’s a significant boost for AI-driven tasks like image recognition, video editing, and even gaming.

Time.news: The article notes that Windows ML intelligently selects the best hardware for each AI task. How does this benefit end-users and developers?

Dr. Sharma: This intelligent hardware selection is crucial for both end-users and developers, because it ensures that each AI task is executed in an optimized way. For end-users, it translates to a smoother overall experience, as applications are faster and more responsive. For developers, it greatly simplifies the development process, since they don’t have to manually manage resource allocation or tailor their applications to specific hardware configurations.

Time.news: NVIDIA also offers a range of SDKs to developers. Can you highlight the most impactful ones and how they facilitate AI integration into various applications?

Dr. Sharma: NVIDIA provides an extensive suite of SDKs, each tailored to a specific area. CUDA remains the foundational SDK for general-purpose GPU acceleration. For those working with graphics, DLSS is transformative for both performance and image quality. Multimedia creators benefit immensely from RTX Video and Maxine. Particularly compelling are Riva and ACE, which address generative AI and enable developers to create truly interactive and intelligent applications. The examples in the article show the power of these SDKs in real-world applications.

Time.news: The article mentions NIM microservices and AI Blueprints, aiming to “democratize AI development.” What is the potential impact of these initiatives?

Dr. Sharma: Democratization is the key word here. One of the biggest barriers to AI development has always been the complexity of setting up the necessary infrastructure and working with intricate models. NVIDIA NIM microservices simplify this by providing pre-packaged, optimized AI models ready to run.

AI Blueprints take it a step further by offering sample workflows and projects, allowing developers to quickly prototype and build AI-powered features without starting from scratch. This substantially lowers the barrier to entry and encourages wider adoption of AI across different software and applications.

Time.news: Let’s talk about Project G-Assist. The idea of an AI assistant for RTX PCs sounds intriguing. What potential do you see for such an assistant, and how might developers leverage it?

Dr. Sharma: Project G-Assist is more than just an assistant; it’s a gateway to a more intuitive user experience. Imagine controlling your PC, optimizing game settings, or troubleshooting issues simply by using voice commands. Project G-Assist opens possibilities for a new kind of human-computer interaction.

For developers, it provides a platform to build plug-ins that extend the functionality of the assistant. As the article mentions, we see plug-ins for everything from unified lighting control to automated task management. The fact that NVIDIA is encouraging community-driven development through Discord and GitHub is a positive sign, as it fosters innovation and ensures a diverse range of use cases.

Time.news: What advice would you give to readers who are interested in exploring the potential of AI on their PCs, whether they are developers or end-users? Any practical tips?

Dr. Sharma: If you’re a developer, start by exploring NVIDIA’s SDKs and the NIM microservices. Even if you’re new to AI, the pre-built models and blueprints can provide a solid foundation. For end-users, keep an eye out for applications that actively leverage NVIDIA’s AI capabilities. Look for the “RTX” badge, which indicates that the application is optimized for RTX AI PCs. Simply using these applications will give you a taste of the power of AI on your desktop. And don’t forget to explore the community-developed plug-ins for Project G-Assist once that functionality becomes available.

Time.news: Dr. Sharma, thank you for sharing your insights. It’s clear that the collaboration between NVIDIA and Microsoft is set to reshape the PC landscape.

Dr. Sharma: My pleasure. The AI revolution is happening now, and it’s exciting to see how it will transform the way we interact with our computers.