
How far is too far when expressing political outrage online? Recent threats against the daughter of Italian Prime Minister Giorgia Meloni and the daughters of Minister Matteo Piantedosi have ignited a fierce debate about the boundaries of free speech and the responsibility of online platforms. This isn’t just an Italian problem; it’s a global issue, and the U.S. is no exception.

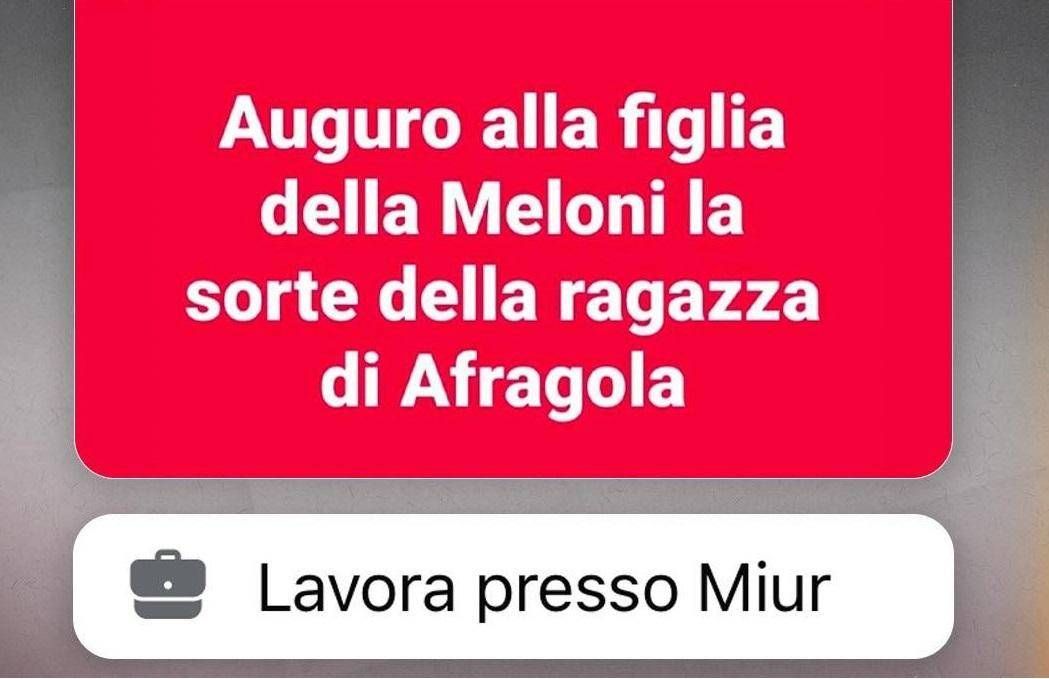

The Chilling Details

The original article describes how the postal police identified a professor in the Nolano region of Italy as the author of threats against Meloni’s daughter. In the post, the author wished the child “the fate of the girl of Afragola,” a chilling reference to a past tragedy. Similarly, threats were directed at the daughters of Minister Piantedosi, accusing him of stealing “money and food of our children.”

Echoes in the American Landscape

While the specifics are Italian, the underlying issue resonates deeply in the U.S. We’ve seen a surge in online harassment and threats targeting politicians, journalists, and even ordinary citizens. Remember the 2020 Michigan kidnapping plot against Governor Gretchen Whitmer, fueled by online rhetoric? Or the constant stream of threats directed at members of Congress? The digital world, while connecting us, has also become a breeding ground for extremism and hate.

Social media platforms are under increasing pressure to moderate content and prevent the spread of hate speech. But where do you draw the line? Is it censorship, or responsible community management? This is a question that American tech giants like Meta (Facebook, Instagram), X (formerly Twitter), and Google (YouTube) grapple with daily.

The Legal and Ethical Minefield

In the U.S., the First Amendment protects freedom of speech, but that protection isn’t absolute. Threats of violence are not protected speech. However, proving intent and establishing a credible threat can be challenging. This legal ambiguity makes it difficult to prosecute online harassers and hold them accountable for their actions.

The Impact on Public Discourse

The rise of online threats has a chilling effect on public discourse. People are afraid to speak their minds for fear of being targeted. This self-censorship undermines democracy and makes it harder to address critical issues. Consider the recent debates around school board meetings, where educators and board members have faced intense harassment and threats, leading some to resign.

Potential Future Developments

So, what does the future hold? Here are a few potential developments:

1. Increased Regulation

We could see increased government regulation of social media platforms, both in the U.S. and internationally. This could include stricter content moderation policies, requirements for identifying and removing hate speech, and greater transparency about algorithms that amplify harmful content. The European Union’s Digital Services Act (DSA) is a prime example of this trend, and it could influence future legislation in the U.S.

2. Enhanced AI-Powered Moderation

Social media companies will likely invest more heavily in AI-powered moderation tools to detect and remove hate speech and threats. However, these tools are not perfect, and they can sometimes make mistakes, leading to accusations of censorship and bias. The challenge will be to develop AI that is both effective and fair.

3. Stricter Enforcement of Existing Laws

Law enforcement agencies may become more proactive in investigating and prosecuting online threats. This could involve increased training for law enforcement officers on how to identify and respond to online harassment, as well as greater collaboration between law enforcement agencies and social media companies.

4. A Shift in Social Norms

Ultimately, addressing the problem of online threats will require a shift in social norms. We need to create a culture where online harassment is not tolerated and where people are held accountable for their words and actions. This will require education, awareness campaigns, and a willingness to challenge hateful rhetoric whenever and wherever it appears.

The Path Forward

The threats against the daughters of Giorgia Meloni and Matteo Piantedosi are a stark reminder of the dangers of online hate. While the solutions are complex and multifaceted, one thing is clear: we cannot afford to ignore this problem. The future of our democracy depends on it.

When Online Hate Turns Real: A Discussion with Digital Safety Expert Dr. Anya Sharma


Time.news Editor: Dr. Sharma, thank you for joining us. The recent threats against the daughters of Italian Prime Minister Giorgia Meloni and Minister Matteo Piantedosi have sparked a global conversation about online hate and its real-world consequences. How important is this issue, and is it unique to Italy?

Dr. Anya Sharma: Thank you for having me. While the specifics of the Italian case are certainly alarming, the core issue of social media threats translating into real-world fear and intimidation is a global one. We see echoes of this everywhere, including here in the U.S. Think about the online rhetoric that fueled the Michigan kidnapping plot against Governor Whitmer, or the constant barrage of threats aimed at public officials. The internet’s anonymity can embolden individuals to express hateful views and issue threats they might never voice in person.

Time.news Editor: The article highlights the role of social media platforms in this problem. What duty do tech giants like Meta, X, and Google have in curbing the spread of online harassment?

Dr. Anya Sharma: They have a significant responsibility. These platforms are the town squares of the 21st century, and they need to ensure a safe and civil environment. This involves actively moderating content, especially hate speech and threats of violence, but it also requires thoughtful consideration of free speech principles. The challenge is finding the right balance between protecting expression and preventing harm.

Time.news Editor: Content moderation is a complex issue. Where do you draw the line between censorship and responsible community management?

Dr. Anya Sharma: That’s the million-dollar question. A good starting point is to focus on content that violates existing laws, such as direct threats of violence. But it goes beyond that. Platforms also need to address content that incites violence or promotes hate, even if it doesn’t directly violate a law. Transparency is key. Users should understand why content is removed and have a clear process for appealing those decisions.

Time.news Editor: Section 230 of the Communications Decency Act is often raised in this debate because of its role in protecting social media companies. Is this protection still warranted in its current form?

Dr. Anya Sharma: Section 230 is a double-edged sword. It shields platforms from liability for user-generated content, which allows them to foster online communities. However, it also makes it difficult to hold them accountable for failing to address harmful content. There’s a growing debate about whether Section 230 should be reformed to encourage platforms to be more proactive in moderating content without stifling innovation or free expression.

Time.news Editor: The article touches on the legal challenges of prosecuting cyberbullying and online hate. Can you elaborate on those challenges?

Dr. Anya Sharma: In the U.S., the First Amendment provides broad protection for freedom of speech, but that protection doesn’t extend to true threats. However, proving intent and establishing a credible threat in the online world can be incredibly difficult. It requires law enforcement to investigate anonymous accounts, gather digital evidence, and demonstrate that the threat was genuinely alarming to the target. This often demands expertise and resources that many law enforcement agencies lack.

Time.news Editor: What impact does this pervasive online hate have on public discourse?

Dr. Anya Sharma: It has a chilling effect. People become afraid to voice their opinions, especially on controversial topics, for fear of being targeted by harassment or threats. This self-censorship stifles debate and makes it harder to address critical issues. It’s a real threat to democracy.

Time.news Editor: The article outlines several potential future developments, including increased regulation, enhanced AI moderation, stricter enforcement, and a shift in social norms. Which of these do you see as most promising?

Dr. Anya Sharma: They are all crucial and interconnected. Increased regulation, like the EU’s Digital Services Act, can set clearer standards for platforms. Enhanced AI moderation can help automate the detection and removal of harmful content, but it requires careful oversight to avoid bias and errors. Stricter enforcement of existing laws can hold individuals accountable for their online actions. But ultimately, we need a cultural shift where online harassment is seen as unacceptable behavior, and individuals are empowered to challenge hateful rhetoric.

Time.news Editor: What advice would you give our readers who are concerned about the rise of social media threats and online harassment?

Dr. Anya Sharma: First, be aware of your own digital footprint and privacy settings. Understand how your information is being shared online and take steps to protect yourself. Second, report harassment and threats to the platform where they occur. Most platforms have clear reporting mechanisms. Third, support organizations that are working to combat online hate and promote digital safety. Finally, be an active bystander. If you see someone being harassed online, speak up and offer your support. Challenge hateful rhetoric and help create a more positive online environment. When it comes to staying safe online, knowledge is power.

Time.news Editor: Dr. Sharma, this has been incredibly insightful. Thank you for sharing your expertise with us.