NVIDIA Ushers in a New Era of Robotics with Breakthrough Physical AI Platform

Table of Contents

- The Three Pillars of NVIDIA’s Robotics Solution

- What is Physical AI and Why is it Significant?

- The Expanding Universe of Robotics

- Humanoid Robots: The Next Frontier

- How NVIDIA’s Computers Work in Harmony

- Digital Twins: Accelerating Robotic Development

- Industry Adoption of NVIDIA’s Platform

- The Future of Physical AI Across Industries

The convergence of artificial intelligence and robotics is no longer a futuristic vision, but a rapidly unfolding reality, powered by NVIDIA’s comprehensive platform for physical AI.

The embodiment of artificial intelligence in robots, visual AI agents, and autonomous systems is experiencing a pivotal moment. To accelerate development in sectors like transportation, manufacturing, logistics, and robotics, NVIDIA has introduced three interconnected computers designed to advance the training, simulation, and deployment of physical AI systems.

The Three Pillars of NVIDIA’s Robotics Solution

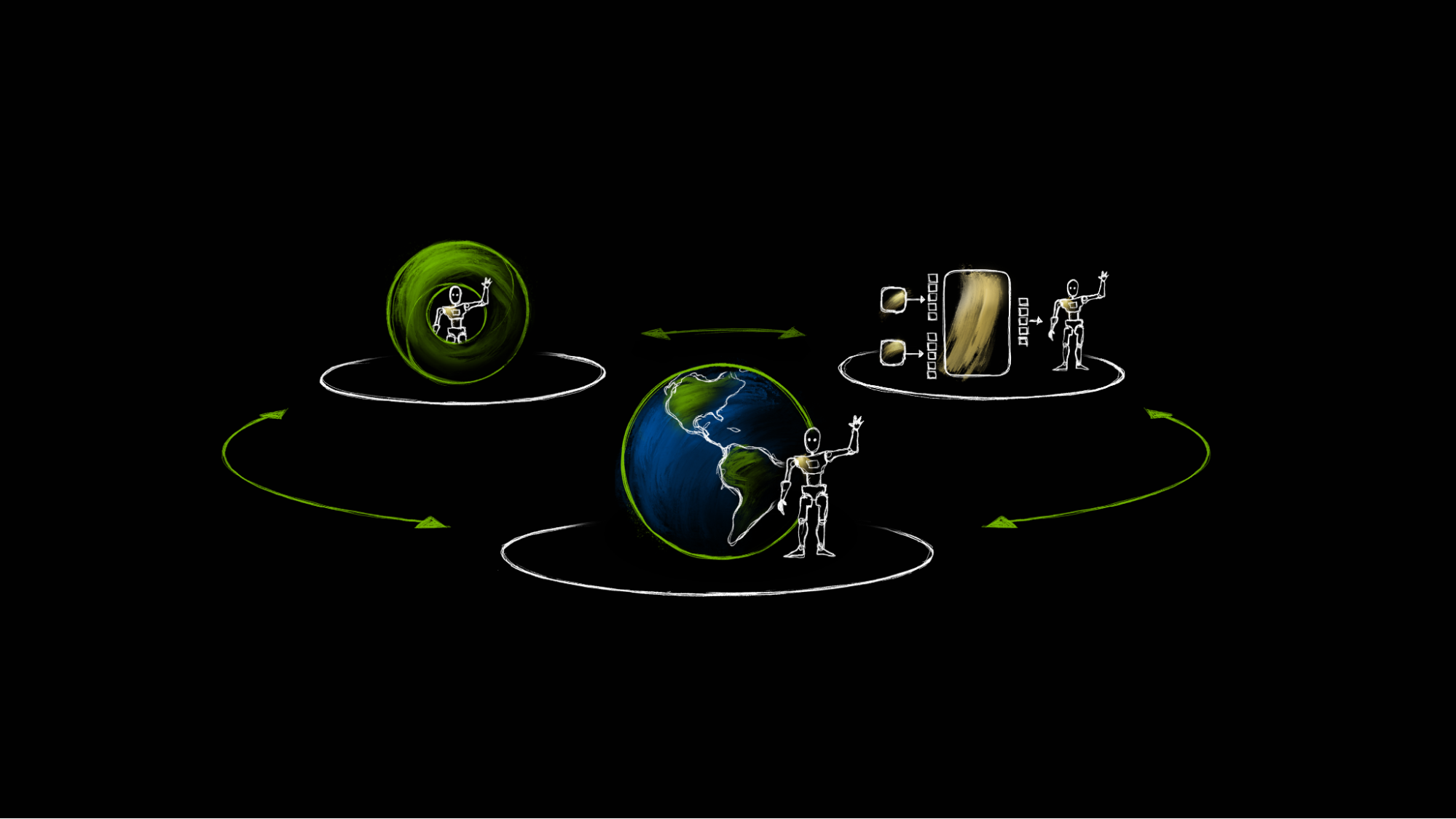

NVIDIA’s approach centers on a three-computer solution: NVIDIA DGX AI supercomputers for AI training, NVIDIA Omniverse and Cosmos on NVIDIA RTX PRO Servers for simulation, and NVIDIA Jetson AGX Thor for on-robot inference. According to a company release, this architecture provides a complete development pipeline, from initial model training to real-world deployment.

What is Physical AI and Why is it Significant?

Unlike agentic AI, which operates within digital environments, physical AI models are designed to perceive, reason, interact with, and navigate the physical world. For six decades, software development relied on “Software 1.0”—code written by human programmers on CPUs. However, a landmark moment arrived in 2012 when Alex Krizhevsky, mentored by Ilya Sutskever and Geoffrey Hinton, won the ImageNet competition with AlexNet, a revolutionary deep learning model.

“This marked the industry’s first contact with AI,” one analyst noted, signaling the beginning of “Software 2.0”—neural networks running on GPUs. Today, the trend has accelerated, with software increasingly writing software, and computing workloads shifting towards accelerated computing on GPUs, surpassing the limitations of Moore’s Law.

While generative AI has excelled in creating responses through large language and image models, these models struggle to comprehend the complexities of the 3D world. This is where physical AI steps in, bridging the gap between digital intelligence and real-world application.

The Expanding Universe of Robotics

A robot, at its core, is a system capable of perceiving, reasoning, planning, acting, and learning. While often visualized as autonomous mobile robots (AMRs), manipulator arms, or humanoid robots, the scope of robotic embodiments is far broader. The near future promises a world where nearly everything that moves, or monitors movement, will be an autonomous robotic system, capable of sensing and responding to its environment.

From autonomous vehicles and surgical rooms to data centers, warehouses, factories, and even entire smart cities, static, manually operated systems are poised to transform into dynamic, interactive systems powered by physical AI.

Humanoid Robots: The Next Frontier

Humanoid robots are emerging as a particularly promising area of development. Their ability to operate efficiently in human-designed environments with minimal adaptation makes them an ideal general-purpose robotic form. According to Goldman Sachs, the global market for humanoid robots is projected to reach $38 billion by 2035—a more than sixfold increase from the roughly $6 billion forecast just two years ago. Researchers and developers worldwide are actively competing to build the next generation of these advanced robots.

How NVIDIA’s Computers Work in Harmony

Robots learn to understand the physical world through three distinct types of computational intelligence, each playing a crucial role in the development process.

1. Training Computer: NVIDIA DGX

Training robots to understand natural language, recognize objects, and plan complex movements requires immense computational power. The NVIDIA DGX platform provides the specialized supercomputing infrastructure necessary for this task. Developers can leverage pre-trained robot foundation models, such as NVIDIA Cosmos open world foundation models or NVIDIA Isaac GR00T humanoid robot foundation models, or train their own custom models.

2. Simulation and Synthetic Data Generation Computer: NVIDIA Omniverse with Cosmos on NVIDIA RTX PRO Servers

A significant challenge in robotics development is the scarcity of real-world data. Unlike large language model research, which benefits from the vastness of internet data, physical AI lacks a comparable resource. Real-world data collection is costly, time-consuming, and often insufficient for addressing edge cases.

To overcome this hurdle, developers can utilize NVIDIA Omniverse and Cosmos to generate massive amounts of physically based, synthetic data—including 2D/3D images, segmentation maps, depth data, and motion trajectories—to accelerate model training and improve performance.
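
As a toy illustration of why synthetic data arrives already labeled, the sketch below generates randomized scenes in plain Python. It is not Omniverse or Cosmos code. The names `make_sample` and `make_dataset`, the 8x8 grid, and the single-box scene are hypothetical choices made for illustration only. Because the generator places the object itself, the segmentation map and depth map come for free alongside the image.

```python
import random

def make_sample(rng, size=8):
    """Render one synthetic scene: a single box at a random place and distance.

    Returns (image, segmentation, depth), each a size x size list of lists.
    Hypothetical toy renderer; real pipelines use a physically based simulator.
    """
    w = rng.randint(2, 4)
    h = rng.randint(2, 4)
    x0 = rng.randint(0, size - w)
    y0 = rng.randint(0, size - h)
    box_depth = rng.uniform(0.5, 3.0)    # metres from the camera
    brightness = rng.uniform(0.3, 1.0)   # randomized lighting
    background_depth = 5.0

    image = [[0.0] * size for _ in range(size)]
    seg = [[0] * size for _ in range(size)]
    depth = [[background_depth] * size for _ in range(size)]
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            image[y][x] = brightness
            seg[y][x] = 1                # class 1 = box, 0 = background
            depth[y][x] = box_depth
    return image, seg, depth

def make_dataset(n, seed=0):
    """Generate n labeled samples; the seed makes the dataset reproducible."""
    rng = random.Random(seed)
    return [make_sample(rng) for _ in range(n)]

dataset = make_dataset(100)
print(len(dataset), "labeled samples generated")
```

Randomizing position, size, distance, and lighting per sample is a simple form of domain randomization; a real pipeline would vary far more (materials, clutter, camera pose) and render with physically based lighting.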

Furthermore, Isaac Sim, built on Omniverse libraries and running on NVIDIA RTX PRO Servers, allows developers to test robot policies in safe, digital twin environments, enabling repeated attempts and learning from mistakes without risking damage or endangering human safety. NVIDIA Isaac Lab, an open-source robot learning framework, further accelerates policy training through reinforcement and imitation learning.
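
The value of repeated attempts in simulation can be shown with a minimal sketch. It assumes a made-up one-dimensional track rather than any Isaac Sim or Isaac Lab API. The environment, the two policies (`cautious`, `reckless`), and the outcome labels are all hypothetical. The point is only that a policy can fail a thousand times in simulation at no physical cost.

```python
import random

def run_episode(policy, rng, max_steps=50):
    """Simulate a robot on a 1-D track; return 'success', 'collision' or 'timeout'."""
    position = 0.0
    goal = rng.uniform(5.0, 10.0)       # randomized goal per episode
    wall = goal + 1.0                   # overshooting the goal by 1 m hits a wall
    for _ in range(max_steps):
        noise = rng.gauss(0.0, 0.05)    # simulated actuator noise
        position += policy(goal - position) + noise
        if position >= wall:
            return "collision"
        if abs(goal - position) < 0.2:
            return "success"
    return "timeout"

def evaluate(policy, episodes=1000, seed=0):
    """Run many randomized episodes and tally the outcomes."""
    rng = random.Random(seed)
    counts = {"success": 0, "collision": 0, "timeout": 0}
    for _ in range(episodes):
        counts[run_episode(policy, rng)] += 1
    return counts

def cautious(error):
    # Proportional step toward the goal, capped at 0.5 m per step.
    return max(-0.5, min(0.5, 0.5 * error))

def reckless(error):
    # Deliberately bad policy: steps three times the remaining distance.
    return 3.0 * error

print("cautious:", evaluate(cautious))
print("reckless:", evaluate(reckless))
```

The reckless policy crashes into the wall in every episode, which is exactly the kind of failure that is cheap to discover in a simulator and expensive to discover on hardware.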

3. Runtime Computer: NVIDIA Jetson AGX Thor

Safe and effective deployment demands a computer capable of real-time autonomous operation, processing sensor data, reasoning, planning, and executing actions within milliseconds. The NVIDIA Jetson AGX Thor delivers this performance in a compact, energy-efficient design, supporting multimodal AI reasoning models that enable robots to interact intelligently with people and the physical world.
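
To make "within milliseconds" concrete, here is a minimal sketch of a sense, decide, act loop with a per-cycle time budget. It is generic Python, not Jetson software. The function `control_loop`, the 10 ms budget, the safe-stop fallback, and the stand-in sensor and actuator callables are assumptions chosen for illustration.

```python
import time

def control_loop(read_sensors, decide, act, cycles, budget_s=0.010,
                 clock=time.perf_counter):
    """Run sense -> decide -> act cycles and count cycles that miss the budget.

    If sensing plus decision-making takes longer than budget_s, the computed
    command is treated as stale and replaced by a safe stop (0.0).
    """
    missed = 0
    for _ in range(cycles):
        start = clock()
        observation = read_sensors()
        command = decide(observation)
        elapsed = clock() - start
        if elapsed > budget_s:
            missed += 1
            command = 0.0   # fall back to a safe stop when the deadline is missed
        act(command)
    return missed

# Stand-in callables: a fixed distance reading and a proportional speed command.
sent = []
missed = control_loop(
    read_sensors=lambda: 2.0,              # metres to the nearest obstacle
    decide=lambda distance: 0.5 * distance,
    act=sent.append,
    cycles=100,
)
print("deadline misses:", missed, "commands sent:", len(sent))
```

Passing the clock in as a parameter is a small design choice that lets the loop be tested deterministically with a fake clock instead of real wall time.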

Digital Twins: Accelerating Robotic Development

Together, these technologies make advanced robotic facilities possible. Manufacturers like Foxconn and logistics companies like Amazon Robotics are already orchestrating teams of autonomous robots alongside human workers, monitoring operations through extensive sensor networks.

These facilities will increasingly rely on digital twins—virtual replicas of physical spaces—for layout planning, optimization, and, crucially, robot fleet software-in-the-loop testing. NVIDIA Mega, built on Omniverse, serves as a blueprint for these factory digital twins, allowing enterprises to test and optimize robot fleets in simulation before deployment, ensuring seamless integration and minimizing disruption.

Mega enables developers to populate digital twins with virtual robots and their AI models, simulating tasks through perception, reasoning, planning, and action. The results are fed back through sensor simulations, allowing the robot brains to continuously refine their actions. This advanced testing process helps anticipate and mitigate potential issues, reducing risk and costs during real-world deployment.
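
One kind of issue that fleet testing in a digital twin can surface before deployment is two robots planning to occupy the same spot at the same time. The sketch below shows that check on a toy grid. It is not based on the Mega blueprint or any Omniverse API. The function `find_conflicts`, the robot names, and the grid paths are hypothetical.

```python
from collections import defaultdict

def find_conflicts(paths):
    """Return (timestep, cell, robots) for every cell claimed by 2+ robots at once.

    `paths` maps a robot name to its planned list of (x, y) cells, one per
    timestep. A robot that has finished its path is assumed to wait on its
    final cell.
    """
    horizon = max(len(p) for p in paths.values())
    conflicts = []
    for t in range(horizon):
        occupied = defaultdict(list)
        for robot, path in paths.items():
            cell = path[min(t, len(path) - 1)]
            occupied[cell].append(robot)
        for cell, robots in occupied.items():
            if len(robots) > 1:
                conflicts.append((t, cell, sorted(robots)))
    return conflicts

plan = {
    "amr_1": [(0, 0), (1, 0), (2, 0), (3, 0)],
    "amr_2": [(2, 2), (2, 1), (2, 0), (2, -1)],
}
print(find_conflicts(plan))   # [(2, (2, 0), ['amr_1', 'amr_2'])]
```

A real digital twin would also model robot dynamics, sensor noise, and human traffic; this check covers only the simplest case of overlapping plans.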

Industry Adoption of NVIDIA’s Platform

NVIDIA’s three-computer approach is already gaining traction among robotics developers and foundation model builders worldwide.

Universal Robots, a Teradyne Robotics company, utilized NVIDIA Isaac Manipulator, Isaac-accelerated libraries, AI models, and NVIDIA Jetson to create UR AI Accelerator, a toolkit designed to accelerate cobot application development. RGo Robotics leveraged NVIDIA Isaac Perceptor to enhance the perception and decision-making capabilities of its wheel.me AMRs.

Leading humanoid robot manufacturers—including 1X Technologies, Agility Robotics, Apptronik, Boston Dynamics, Fourier, Galbot, Mentee, Sanctuary AI, Unitree Robotics, and XPENG Robotics—are adopting NVIDIA’s robotics development platform. Boston Dynamics is utilizing Isaac Sim and Isaac Lab for quadruped development and Jetson Thor for humanoid robots, while Fourier is leveraging Isaac Sim to train humanoid robots for applications in scientific research, healthcare, and manufacturing. Galbot has advanced dexterous grasp datasets using Isaac Lab and Isaac Sim, and employs Jetson Thor for real-time control. Field AI is developing risk-bounded foundation models for outdoor robotic operations using the Isaac platform and Isaac Lab.

The Future of Physical AI Across Industries

As robotics use cases expand across global industries, NVIDIA’s three-computer approach to physical AI holds immense potential to enhance human work in manufacturing, logistics, service, and healthcare.

Explore NVIDIA’s robotics platform to get started with training, simulation, and deployment tools for physical AI.