Enterprises are discovering that simply bolting retrieval systems onto large language models isn’t enough: retrieval has become a critical infrastructure dependency, and its failures can quickly translate into real-world business risk.

RAG’s Growing Pains: Why Retrieval is Now Foundational

As AI systems take on more responsibility, the reliability of the information they access is paramount.

- Early Retrieval-Augmented Generation (RAG) systems were designed for limited use cases and assumed stable data.

- Modern AI demands retrieval systems that handle constantly changing data, complex reasoning, and autonomous workflows.

- Freshness, governance, and evaluation are no longer optional features but essential architectural concerns.

- Treating retrieval as infrastructure—rather than application logic—is key to building reliable and scalable AI systems.

Initially, Retrieval-Augmented Generation (RAG) implementations focused on straightforward tasks like document search and internal question answering. These systems operated within tightly defined boundaries, assuming relatively static information and human oversight. But those assumptions are rapidly crumbling.

The Challenges of Scaling RAG

Today’s enterprise AI systems increasingly operate under conditions that expose the limitations of traditional RAG approaches:

- Continuously changing data sources

- Multi-step reasoning across diverse domains

- Agent-driven workflows that autonomously retrieve context

- Strict regulatory and audit requirements for data usage

In these complex environments, even a single outdated index or improperly configured access policy can have cascading consequences. Treating retrieval as a mere enhancement to inference obscures its growing role as a significant source of systemic risk.

Freshness: A Systems-Level Problem

Failures related to outdated information rarely stem from the embedding models themselves. The root cause typically lies within the surrounding system. Many enterprise retrieval stacks struggle to answer fundamental operational questions:

- How quickly do changes to source data propagate into indexes?

- Which applications are still querying outdated information?

- What guarantees exist regarding data consistency during mid-session updates?

Mature platforms enforce data freshness through explicit architectural mechanisms—such as event-driven reindexing, versioned embeddings, and retrieval-time awareness of data staleness—rather than relying on periodic rebuilds.

The recurring pattern across deployments is that freshness issues arise when source systems are updated continuously while indexing and embedding pipelines lag behind, leaving consumers unknowingly operating on stale context. Because the system still generates fluent, plausible responses, these gaps often go unnoticed until autonomous workflows depend on retrieval, and reliability problems emerge at scale.
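Retrieval-time awareness of staleness, one of the mechanisms mentioned above, can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration rather than a description of any particular platform: the `IndexedChunk` metadata fields, the reason strings, and the idea that each chunk carries both a source timestamp and an indexing timestamp. The age threshold is included only as a backstop; the primary signal is whether the source changed after the chunk was indexed.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class IndexedChunk:
    """Hypothetical per-chunk metadata kept alongside the embedding."""
    doc_id: str
    embedding_version: str       # which embedding model/version produced the vector
    source_updated_at: datetime  # last change seen in the source system
    indexed_at: datetime         # when the chunk was last embedded and indexed


def staleness_reasons(chunk: IndexedChunk, *, now: datetime,
                      max_index_age: timedelta, current_version: str) -> list[str]:
    """Return every reason the chunk should be treated as stale (empty if fresh)."""
    reasons = []
    if chunk.source_updated_at > chunk.indexed_at:
        reasons.append("source_changed_after_indexing")
    if chunk.embedding_version != current_version:
        reasons.append("outdated_embedding_version")
    if now - chunk.indexed_at > max_index_age:
        reasons.append("index_older_than_max_age")
    return reasons


def split_by_freshness(chunks, *, now, max_index_age, current_version):
    """Partition retrieved chunks into fresh ones and (chunk, reasons) pairs."""
    fresh, stale = [], []
    for chunk in chunks:
        reasons = staleness_reasons(
            chunk, now=now, max_index_age=max_index_age,
            current_version=current_version,
        )
        if reasons:
            stale.append((chunk, reasons))
        else:
            fresh.append(chunk)
    return fresh, stale
```

A caller could drop stale chunks, down-rank them, or pass the reasons to the consumer so that an agent knows it is working from lagging context. Which of these is appropriate depends on the workflow.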

Governance: Extending Control to the Retrieval Layer

Most enterprise governance models were designed to manage data access and model usage independently. Retrieval systems occupy an awkward space between the two, creating potential vulnerabilities.

Ungoverned retrieval introduces several risks:

- Models accessing data outside their authorized scope

- Sensitive information leaking through embeddings

- Agents retrieving information they are not permitted to act upon

- Inability to trace the data used to inform a decision

In retrieval-centric architectures, governance must operate at semantic boundaries—not just at the storage or API layers. This requires policy enforcement tied to queries, embeddings, and downstream consumers, not simply datasets.

Effective retrieval governance typically includes:

- Domain-scoped indexes with clear ownership

- Policy-aware retrieval APIs

- Audit trails linking queries to retrieved artifacts

- Controls on cross-domain retrieval by autonomous agents

Without these controls, retrieval systems can inadvertently bypass safeguards organizations believe are in place.
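As a rough illustration of a policy-aware retrieval API with an audit trail, the following Python sketch wraps a search function, filters candidates by the caller's permitted domains, and records what was returned and what was withheld. All names (`Document`, `Caller`, `AuditRecord`, `PolicyAwareRetriever`, `search_fn`) are hypothetical, and the domain-based policy is a deliberately simple stand-in for whatever policy model an organization actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class Document:
    doc_id: str
    domain: str  # owning domain of the index this document belongs to
    text: str


@dataclass(frozen=True)
class Caller:
    caller_id: str
    allowed_domains: frozenset[str]  # domains this user or agent may read


@dataclass(frozen=True)
class AuditRecord:
    caller_id: str
    query: str
    returned_doc_ids: list[str]
    denied_doc_ids: list[str]
    timestamp: datetime


class PolicyAwareRetriever:
    """Wraps a search function; enforces domain scope and logs every query."""

    def __init__(self, search_fn: Callable[[str], list[Document]]):
        self._search = search_fn  # assumed to return ranked candidates
        self.audit_log: list[AuditRecord] = []

    def retrieve(self, caller: Caller, query: str, k: int = 5) -> list[Document]:
        candidates = self._search(query)
        allowed = [d for d in candidates if d.domain in caller.allowed_domains]
        denied = [d for d in candidates if d.domain not in caller.allowed_domains]
        results = allowed[:k]
        # Link the query to the artifacts actually returned, and to those withheld.
        self.audit_log.append(AuditRecord(
            caller_id=caller.caller_id,
            query=query,
            returned_doc_ids=[d.doc_id for d in results],
            denied_doc_ids=[d.doc_id for d in denied],
            timestamp=datetime.now(timezone.utc),
        ))
        return results
```

This sketch filters after search for brevity. Pushing the domain constraint into the index query itself is often preferable, since it avoids scoring documents the caller may never see, but that depends on what the underlying index supports.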

Evaluation: Beyond Answer Quality

Traditional RAG evaluation focuses primarily on the correctness of responses. This is insufficient for enterprise-grade systems.

Retrieval failures often manifest upstream of the final answer:

- Irrelevant, yet plausible, documents being retrieved

- Critical context being missed

- Outdated sources being overrepresented

- Authoritative data being silently excluded

As AI systems become more autonomous, teams must evaluate retrieval as an independent subsystem. This includes measuring recall under policy constraints, monitoring freshness drift, and detecting bias introduced by retrieval pathways.

In production environments, evaluation often breaks down once retrieval becomes autonomous. Teams continue to assess answer quality on sampled prompts but lack visibility into what was retrieved, what was missed, or whether stale or unauthorized context influenced decisions. As retrieval pathways evolve dynamically, silent drift accumulates, and issues are often misattributed to model behavior rather than the retrieval system itself.

Evaluation that ignores retrieval behavior leaves organizations blind to the true causes of system failures.
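Evaluating retrieval as its own subsystem can start with a few small metrics computed on the retrieved set rather than on the final answer. The Python sketch below is one possible formulation, not a standard: it assumes a labeled set of relevant document IDs per query, a set of IDs the caller is permitted to see, and a set of IDs known to be stale. The function names are illustrative.

```python
from __future__ import annotations


def recall_at_k(retrieved_ids: list[str], relevant_ids: set[str], k: int) -> float | None:
    """Plain recall@k: share of relevant documents found in the top k."""
    if not relevant_ids:
        return None  # undefined when nothing is labeled relevant
    return len(set(retrieved_ids[:k]) & relevant_ids) / len(relevant_ids)


def policy_constrained_recall(retrieved_ids: list[str], relevant_ids: set[str],
                              permitted_ids: set[str], k: int) -> float | None:
    """Recall@k measured only against relevant documents the caller may see."""
    reachable = relevant_ids & permitted_ids
    if not reachable:
        return None
    return len(set(retrieved_ids[:k]) & reachable) / len(reachable)


def policy_violations(retrieved_ids: list[str], permitted_ids: set[str]) -> list[str]:
    """Documents that were retrieved although the caller is not permitted to see them."""
    return [doc_id for doc_id in retrieved_ids if doc_id not in permitted_ids]


def stale_fraction(retrieved_ids: list[str], stale_ids: set[str], k: int) -> float | None:
    """Share of the top k results that come from sources known to be stale."""
    top_k = retrieved_ids[:k]
    if not top_k:
        return None
    return sum(1 for doc_id in top_k if doc_id in stale_ids) / len(top_k)
```

Tracked per query and over time, a falling policy-constrained recall or a rising stale fraction points at the retrieval system rather than the model, which is the distinction that answer-only evaluation tends to blur.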

A New Architecture: Retrieval as Infrastructure

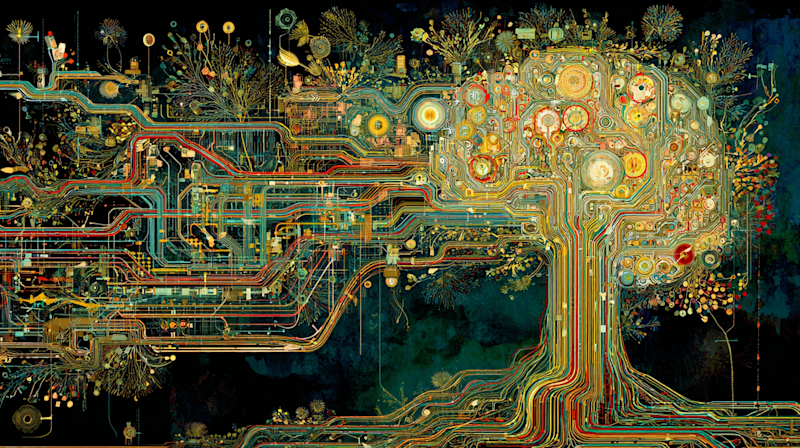

Retrieval as infrastructure — A reference architecture illustrating how freshness, governance, and evaluation function as first-class system planes rather than embedded application logic. Conceptual diagram created by the author.

A retrieval system designed for enterprise AI typically consists of five interdependent layers:

- Source ingestion layer: Handles structured, unstructured, and streaming data with provenance tracking.

- Embedding and indexing layer: Supports versioning, domain isolation, and controlled update propagation.

- Policy and governance layer: Enforces access controls, semantic boundaries, and auditability at retrieval time.

- Evaluation and monitoring layer: Measures freshness, recall, and policy adherence independently of model output.

- Consumption layer: Serves humans, applications, and autonomous agents with contextual constraints.

This architecture treats retrieval as shared infrastructure rather than application-specific logic, enabling consistent behavior across use cases.
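One way to picture retrieval as shared infrastructure is a single service object that every consumer calls, with the indexing, policy, and monitoring layers plugged in as separate components. The Python sketch below is a conceptual illustration only: `RetrievalService`, `Chunk`, and the three callables are hypothetical names, and the ingestion layer is assumed to have populated whatever index `search` reads from.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    domain: str
    text: str


@dataclass
class RetrievalService:
    """One shared entry point; each layer is injected rather than hard-coded."""
    search: Callable[[str], list[Chunk]]        # embedding and indexing layer
    is_permitted: Callable[[str, Chunk], bool]  # policy and governance layer
    on_retrieval: Callable[[dict], None]        # evaluation and monitoring layer

    def retrieve(self, consumer_id: str, query: str, k: int = 3) -> list[Chunk]:
        """Consumption layer: humans, applications, and agents all call this."""
        candidates = self.search(query)
        permitted = [c for c in candidates if self.is_permitted(consumer_id, c)]
        results = permitted[:k]
        # Emit a monitoring event that does not depend on any model output.
        self.on_retrieval({
            "consumer_id": consumer_id,
            "query": query,
            "returned": [c.doc_id for c in results],
            "filtered_out": len(candidates) - len(permitted),
        })
        return results
```

Because the policy check and the monitoring hook live in the service rather than in each application, a dashboard used by analysts and an autonomous agent would pass through the same enforcement and produce the same kind of telemetry.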

The Future of Reliable AI

As enterprises move toward agentic systems and long-running AI workflows, retrieval becomes the foundation upon which reasoning depends. Models can only be as reliable as the context they are given.

Organizations that continue to treat retrieval as an afterthought will face:

- Unexplained model behavior

- Compliance gaps

- Inconsistent system performance

- Erosion of stakeholder trust

Those that elevate retrieval to an infrastructure discipline—governed, evaluated, and engineered for change—will build a foundation that scales with both autonomy and risk.

Conclusion

Retrieval is no longer a supporting feature of enterprise AI systems; it is infrastructure.

Freshness, governance, and evaluation are not optional optimizations; they are prerequisites for deploying AI systems that operate reliably in real-world environments. As organizations move beyond experimental RAG deployments toward autonomous and decision-support systems, the architectural treatment of retrieval will increasingly determine success or failure.

Enterprises that recognize this shift early will be better positioned to scale AI responsibly, withstand regulatory scrutiny, and maintain trust as systems grow more capable—and more consequential.

Varun Raj is a cloud and AI engineering executive specializing in enterprise-scale cloud modernization, AI-native architectures, and large-scale distributed systems.
