The Brain’s Dynamic Vision: How Top-Down Processing is Rewriting the Rules of Perception

Table of Contents

- The Brain’s Dynamic Vision: How Top-Down Processing is Rewriting the Rules of Perception

- The Illusion of Stability: How Your Brain Actively Constructs Reality

- Implications for Autism: A New Perspective on Perceptual Differences

- The Broader Impact: Rewriting the Textbook on Brain Function

- FAQ: Decoding the Dynamic Brain

- Pros and Cons: The Dynamic Brain Revolution

- Conclusion: A New Era of Brain Science

- Rewriting Vision: An Expert Q&A on Top-Down Processing and the Dynamic Brain

What if everything you thought you knew about how your brain processes vision was wrong? New research is turning the traditional “bottom-up” view of visual processing on its head. It reveals a dynamic system in which your brain actively anticipates and interprets what you see, moment by moment. This “top-down” influence isn’t just a minor tweak. It’s a fundamental shift that could revolutionize our understanding of perception and pave the way for new treatments for brain disorders like autism.

The Illusion of Stability: How Your Brain Actively Constructs Reality

From the moment we open our eyes, our brains are bombarded with visual data. We effortlessly assemble these fragments into a coherent, recognizable world. But this seamless experience is an illusion, a testament to the brain’s astonishing ability to predict, interpret, and adapt. The old model suggested a rigid hierarchy: simple features detected in the primary visual cortex (V1) are progressively combined into more complex representations as information flows “up” to higher brain regions. Think of it like an assembly line, where each station performs a specific, unchanging task.

However, groundbreaking research from the lab of Charles D. Gilbert at Rockefeller University is revealing a far more dynamic and interactive process. Their work, recently published in PNAS, demonstrates that neurons in the visual cortex aren’t just passive receivers; they’re active participants, constantly adjusting their responsiveness based on prior experiences and current task demands. This is the essence of “top-down” processing: higher brain regions sending contextual information back down the visual pathway, shaping how we perceive the world.

Challenging the Classical View: Neurons That Adapt on the Fly

The classical view held that neurons in the early visual cortex were specialized for detecting simple features like lines and edges. Complexity, it was believed, increased as information moved up the hierarchy. Gilbert’s research challenges this notion, showing that even neurons in V1 can dynamically alter their functional properties. They can switch their inputs, prioritizing task-relevant information and filtering out distractions. This plasticity, the brain’s ability to reorganize itself, is key to understanding how we learn and adapt to a constantly changing environment.

This dynamic tuning is facilitated by feedback connections, which are neural pathways that run from higher cortical areas back to lower ones. These connections aren’t just passive conduits; they actively influence the activity of neurons in the visual cortex. Imagine a conductor leading an orchestra: the higher-level areas provide the instructions, and the lower-level areas execute them, constantly adjusting their performance based on the conductor’s cues.

The Macaque Study: Unveiling the Brain’s Predictive Power

To investigate this dynamic processing, Gilbert’s team trained macaques to recognize a variety of objects, from fruits and vegetables to tools and machines. Using fMRI, they identified brain regions that responded to these visual stimuli. Then they implanted electrode arrays to record the activity of individual neurons as the monkeys performed a “delayed match-to-sample” task. In this task, the monkeys were shown an object, followed by a delay, and then presented with a series of images. Their job was to indicate when an image in the series matched the original object.

The results were striking. The researchers found that a single neuron could be more responsive to one target in one context and more responsive to a different target in another context. This demonstrated that these neurons are not fixed in their function but are rather “adaptive processors” that change on a moment-to-moment basis, taking on different roles depending on the task at hand.
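A toy model can show what it means for a single neuron to favor different targets in different contexts. The Python sketch below is purely hypothetical. It assumes that top-down signals act as multiplicative gains on a neuron’s feedforward inputs. This is a common simplification in computational models, not the mechanism reported by Gilbert’s lab. The names `CONTEXT_GAINS` and `response` and all numeric values are invented for illustration.

```python
# Hypothetical toy neuron: two feedforward inputs ("A" and "B" features) whose
# weights are re-scaled by a top-down context signal. All numbers are invented.

CONTEXT_GAINS = {
    "search_for_A": {"A": 1.5, "B": 0.5},  # context boosts A-related input
    "search_for_B": {"A": 0.5, "B": 1.5},  # context boosts B-related input
}

def response(stimulus: dict[str, float], context: str, baseline: float = 0.1) -> float:
    """Rectified firing-rate proxy: baseline plus context-weighted input drive."""
    gains = CONTEXT_GAINS[context]
    drive = sum(gains[feature] * strength for feature, strength in stimulus.items())
    return max(0.0, baseline + drive)

target_a = {"A": 1.0, "B": 0.2}  # image that mostly contains A-like features
target_b = {"A": 0.2, "B": 1.0}  # image that mostly contains B-like features

for context in CONTEXT_GAINS:
    print(context, round(response(target_a, context), 2), round(response(target_b, context), 2))
```

With these made-up numbers, the model neuron responds more strongly to target A while the task is to search for A. It responds more strongly to target B while searching for B. Its feedforward wiring never changes; only the top-down context does.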

The Role of Working Memory: Holding the World in Mind

The delayed match-to-sample task also highlighted the crucial role of working memory. While the monkeys searched for a match, they had to hold the original image in their minds. This active maintenance of information allows the brain to compare incoming sensory data with stored representations, enabling us to recognize objects even when they are partially obscured or presented from different angles.
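The logic of the delayed match-to-sample task, and the role working memory plays in it, can be spelled out as a short simulation. This is a minimal Python sketch under stated assumptions, not the study’s actual protocol. The object list, the number of distractors, and the names `run_dms_trial` and `TrialResult` are hypothetical. The simulated “agent” is an idealized matcher with perfect memory, so it always succeeds.

```python
import random
from dataclasses import dataclass

# Hypothetical stimulus set; the study's actual images are not reproduced here.
OBJECTS = ["apple", "carrot", "hammer", "drill", "banana", "wrench"]

@dataclass
class TrialResult:
    sample: str
    test_sequence: list[str]
    match_index: int     # where the matching image appears in the test sequence
    reported_index: int  # where the simulated agent signalled "match"

def run_dms_trial(rng: random.Random, n_distractors: int = 3) -> TrialResult:
    """Simulate one delayed match-to-sample trial with an idealized agent."""
    # Sample period: one object is shown and stored in working memory.
    sample = rng.choice(OBJECTS)
    working_memory = sample
    # Delay period: nothing is shown, so the only trace of the sample is the stored copy.
    # Test period: distractors plus one matching image, at a random position.
    distractors = rng.sample([o for o in OBJECTS if o != sample], n_distractors)
    match_index = rng.randrange(n_distractors + 1)
    test_sequence = distractors[:match_index] + [sample] + distractors[match_index:]
    # The agent compares each incoming image with the remembered sample.
    reported_index = next(
        i for i, image in enumerate(test_sequence) if image == working_memory
    )
    return TrialResult(sample, test_sequence, match_index, reported_index)

result = run_dms_trial(random.Random(0))
print(result.sample, result.test_sequence, result.reported_index == result.match_index)
```

A real subject’s memory of the sample can fade or be disrupted during the delay. In a sketch like this, that could be modeled by corrupting `working_memory` before the test period begins.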

Beyond Simple Features: Early Visual Neurons as Dynamic Interpreters

Perhaps the most surprising finding was that neurons in the early visual cortex, traditionally thought to be limited to processing simple features, were capable of much more. They could dynamically adjust their responses based on the task and the context, demonstrating that even the earliest stages of visual processing are influenced by top-down signals.

Implications for Autism: A New Perspective on Perceptual Differences

The implications of this research extend far beyond our understanding of normal visual perception. Gilbert believes that top-down interactions are central to all brain functions, including other senses, motor control, and higher-order cognitive processes. Understanding the cellular and circuit basis for these interactions could provide new insights into the mechanisms underlying brain disorders like autism.

Individuals with autism often experience the world differently, with heightened sensitivity to sensory stimuli and difficulties with social interaction. These differences may be related to altered feedback mechanisms in the brain. By studying animal models of autism, Gilbert’s lab hopes to identify perceptual differences and the underlying cortical circuits that contribute to these differences.

The Future of Autism Research: Targeting Feedback Mechanisms

Will Snyder, a research specialist in Gilbert’s lab, is leading the charge in investigating perceptual differences between autism-model mice and their wild-type littermates. Using advanced neuroimaging technologies at the Elizabeth R. Miller Brain Observatory, the lab will observe large neuronal populations in the animals’ brains as they engage in natural behaviors. The goal is to identify specific differences in cortical circuits that may underlie the perceptual differences observed in autism.

This research could pave the way for new therapies that target feedback mechanisms in the brain, potentially alleviating some of the sensory and cognitive challenges faced by individuals with autism. Imagine a future where personalized interventions are tailored to address specific imbalances in top-down and bottom-up processing, leading to improved sensory integration and social communication skills.

The Broader Impact: Rewriting the Textbook on Brain Function

The shift towards a more dynamic and interactive view of brain function has profound implications for neuroscience as a whole. It challenges the traditional “assembly line” model, suggesting that the brain is more like a dynamic network, constantly adapting and reorganizing itself based on experience. This new perspective could revolutionize our understanding of learning, memory, and cognition.

Moreover, understanding top-down processing could lead to new advances in artificial intelligence. Current AI systems are largely based on feedforward models, mimicking the traditional view of brain function. By incorporating feedback mechanisms and dynamic learning algorithms, we could create AI systems that are more flexible, adaptable, and capable of handling complex, real-world tasks.

The Rise of Computational Neuroscience: Modeling the Brain’s Complexity

Computational neuroscience is playing an increasingly crucial role in unraveling the mysteries of the brain. By creating computer models of neural circuits, researchers can simulate brain activity and test hypotheses about how different brain regions interact. These models can help us understand how feedback mechanisms contribute to perception, cognition, and behavior.

For example, researchers are using deep learning models to investigate feedback mechanisms in the visual cortex [[3]]. These models can be trained to perform visual tasks, and then analyzed to see how they use feedback connections to improve their performance. This approach can provide valuable insights into the functional role of feedback in the brain.
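The following is a minimal two-layer rate model in Python that makes the difference between a purely feedforward model and one with feedback connections concrete. It is a hand-wired toy, not one of the trained deep learning models cited above. The weights, the `feedback_strength` parameter, and the function names are assumptions made for illustration. Setting `feedback_strength` to zero gives a feedforward pass. A positive value lets the higher layer’s pooled estimate re-enter the lower layer on each iteration.

```python
# Hypothetical two-layer rate model with top-down feedback. Weights are
# hand-picked for illustration; nothing here is learned or taken from a study.

def relu(v):
    """Rectify a vector so firing rates stay non-negative."""
    return [max(0.0, x) for x in v]

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

# Feedforward weights: higher unit 0 pools lower units 0-1, higher unit 1 pools 2-3.
W_UP = [[1.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 1.0]]
# Feedback weights mirror the feedforward wiring (the transpose of W_UP).
W_DOWN = [list(col) for col in zip(*W_UP)]

def run(x, feedback_strength, steps=10):
    """Iterate the network; feedback_strength=0 reduces it to a feedforward pass.

    For this wiring and non-negative inputs, strengths below 0.5 keep the loop stable.
    """
    lower = relu(x)
    higher = relu(matvec(W_UP, lower))
    for _ in range(steps):
        top_down = matvec(W_DOWN, higher)
        lower = relu([xi + feedback_strength * t for xi, t in zip(x, top_down)])
        higher = relu(matvec(W_UP, lower))
    return lower, higher

occluded = [1.0, 0.0, 0.0, 0.0]  # only lower unit 0 receives direct input
print("feedforward:", run(occluded, feedback_strength=0.0)[0])
print("with feedback:", [round(r, 3) for r in run(occluded, feedback_strength=0.2)[0]])
```

With this “partially obscured” input, the feedforward run leaves lower unit 1 silent. The feedback run gradually fills it in, to roughly 1/3 here, because the higher unit that pools units 0 and 1 sends activity back to both. This loosely echoes the idea that top-down signals help complete partially hidden objects, but it is only an analogy.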

The Future of Brain-Computer Interfaces: Harnessing Top-Down Control

Brain-computer interfaces (BCIs) are devices that allow us to communicate with and control external devices using our brain activity. Current BCIs are largely based on decoding motor commands, allowing paralyzed individuals to control prosthetic limbs or computer cursors. However, understanding top-down processing could lead to new BCIs that can tap into higher-level cognitive functions.

Imagine a BCI that can detect your intentions and goals, and then use that information to guide your actions. This could be used to improve learning, enhance creativity, or even treat mental disorders. By harnessing the power of top-down control, we could unlock new possibilities for human-machine interaction.

FAQ: Decoding the Dynamic Brain

What is top-down processing?

Top-down processing is a cognitive process where our brains use prior knowledge, expectations, and context to interpret incoming sensory information. It’s like having a mental framework that helps us make sense of the world around us.

How does top-down processing differ from bottom-up processing?

Bottom-up processing starts with sensory input and builds up to a complete perception. Top-down processing, on the other hand, starts with our existing knowledge and uses it to interpret sensory input. They work together to create our perception of reality.

What is the primary visual cortex (V1)?

The primary visual cortex (V1) is the first cortical area to receive visual information from the retina, which reaches it via a relay in the thalamus. It’s responsible for processing basic visual features like lines, edges, and colors.

How does feedback modulation affect neurons in V1?

Feedback modulation allows higher-level brain regions to influence the activity of neurons in V1. This can change how these neurons respond to visual stimuli, making them more or less sensitive to certain features depending on the context and task demands.

What does this research mean for autism?

This research suggests that altered feedback mechanisms in the brain may contribute to the sensory and cognitive differences observed in autism. By understanding these mechanisms, we may be able to develop new therapies that target these imbalances.

Pros and Cons: The Dynamic Brain Revolution

Pros

- Deeper Understanding of Brain Function: Provides a more nuanced and accurate model of how the brain processes information.

- New Avenues for Treating Brain Disorders: Opens up new possibilities for developing therapies that target feedback mechanisms in disorders like autism.

- Advancements in Artificial Intelligence: Could lead to more flexible and adaptable AI systems.

- Improved Brain-Computer Interfaces: May enable the development of BCIs that can tap into higher-level cognitive functions.

Cons

- Complexity: The brain is incredibly complex, and understanding feedback mechanisms is a daunting task.

- Ethical Considerations: As we develop new technologies that can manipulate brain activity, we must consider the ethical implications.

- Long Research Timeline: Translating basic research findings into clinical applications can take many years.

- Potential for Misinterpretation: Oversimplifying complex brain processes can lead to inaccurate conclusions.

Conclusion: A New Era of Brain Science

The discovery that the brain’s visual cortex is a dynamic and adaptive system is a game-changer. It challenges long-held assumptions about how we perceive the world and opens up exciting new avenues for research and treatment. As we continue to unravel the mysteries of top-down processing, we can expect to see even more groundbreaking discoveries that will transform our understanding of the brain and its remarkable capabilities. The future of brain science is dynamic, interactive, and full of potential.

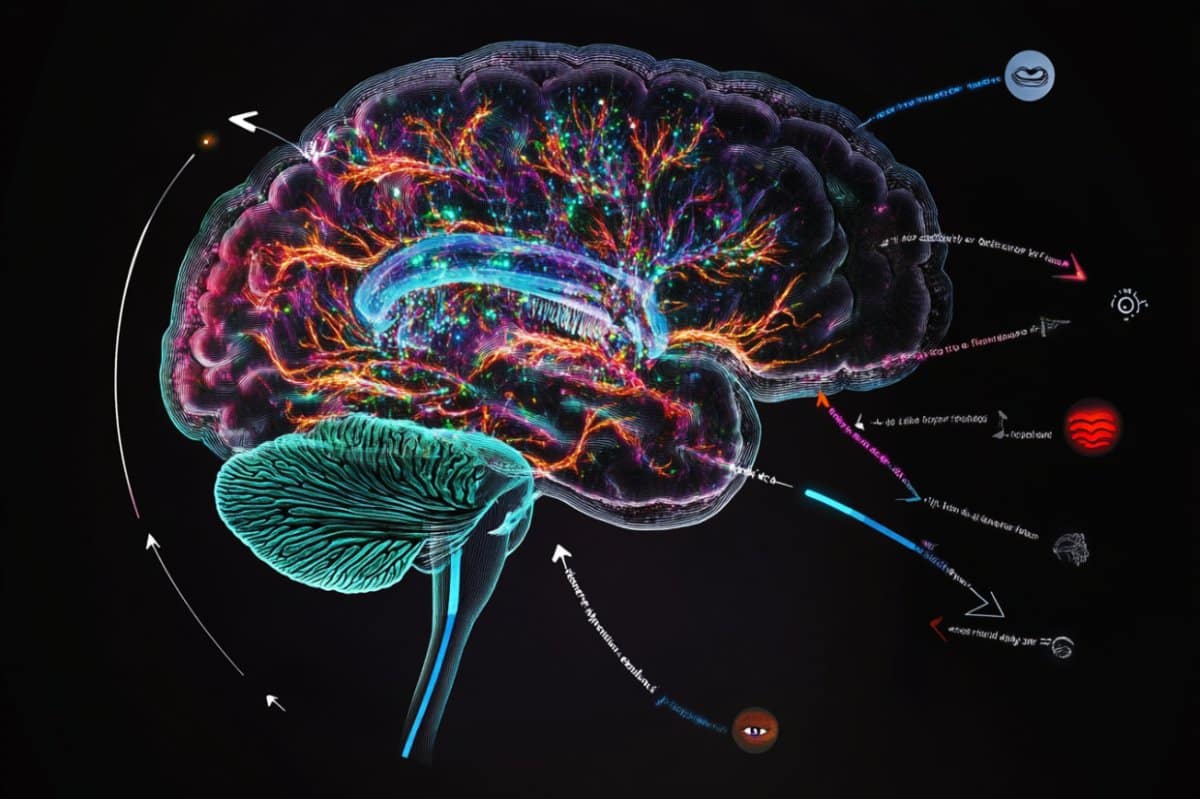


Rewriting Vision: An Expert Q&A on Top-Down Processing and the Dynamic Brain


The way we perceive the world has long been attributed to a “bottom-up” process, where our brains passively receive and assemble visual details. But what if our brains are actively shaping what we see, moment by moment? Groundbreaking research suggests that “top-down processing,” in which our prior knowledge and expectations influence perception, plays a much larger role than previously thought. We sat down with Dr. Anya Sharma, a leading cognitive neuroscientist, to delve into this fascinating field.

Time.news: Dr. Sharma, thanks for joining us. Let’s start with the basics. Can you explain what top-down processing is in the context of visual perception?

Dr. Sharma: Absolutely. Traditionally, the visual system was viewed as a hierarchical assembly line. Simple features are detected in the primary visual cortex (V1), then combined into more complex representations as information flows “up” to higher brain regions [[2]]. Top-down processing flips this. It’s where higher-level brain areas, loaded with our memories, experiences, and even our current goals, send signals back down to these early visual areas, influencing how we interpret the incoming sensory information. It’s like having a mental filter that prioritizes and interprets what we see based on what we already know [[1]].

Time.news: The article highlights research showing that even neurons in V1, traditionally considered simple feature detectors, are influenced by top-down signals. How significant is this?

Dr. Sharma: This is a really big deal. It challenges the long-held assumption that V1 neurons are fixed in their function. The research suggests they are more like “adaptive processors,” constantly adjusting their responsiveness based on the context and task at hand. This plasticity, the brain’s ability to reorganize itself, is key to understanding how we learn and adapt. It means the early visual cortex isn’t just passively receiving; it’s actively participating in constructing our visual reality. These neurons can switch inputs, prioritizing task-relevant information and filtering out distractions.

Time.news: The research also mentions the role of working memory. How does that fit into this dynamic view of visual processing?

Dr. Sharma: Working memory is like your brain’s short-term holding area. In a visual task, like the “delayed match-to-sample” task described in the article, you need to hold the original image in your mind while searching for a match. This active maintenance of information allows the brain to compare incoming sensory data with stored representations. It’s why we can recognize objects even when they are partially obscured or presented from different angles. Working memory provides the “context” that allows top-down processing to effectively shape our perception.

Time.news: The article emphasizes the potential implications for understanding and treating autism. Can you elaborate on that?

Dr. Sharma: Absolutely. Individuals with autism often experience the world differently, sometimes with heightened sensitivity to sensory stimuli. This research suggests that these differences may be related to altered feedback mechanisms in the brain, meaning a disruption in the delicate balance between top-down and bottom-up processing. By studying animal models of autism and using advanced neuroimaging, researchers hope to identify specific differences in cortical circuits that might underlie these perceptual differences. This could pave the way for new therapies that target these imbalances, potentially alleviating some of the sensory and cognitive challenges faced by individuals with autism.

Time.news: Besides autism, what other brain disorders might benefit from this deeper understanding of top-down processing?

Dr. Sharma: The principles of top-down processing are fundamental to many cognitive functions. Dysregulation of these processes could be implicated in a range of conditions, including schizophrenia, ADHD, and even certain types of anxiety disorders. Any condition where sensory processing or cognitive control is disrupted could potentially be better understood through this lens.

Time.news: The article also touches on the potential for advancements in artificial intelligence and brain-computer interfaces (BCIs). Can you explain how understanding top-down processing might contribute to these fields?

Dr. Sharma: Current AI systems are largely based on feedforward models, mimicking the traditional view of brain function. By incorporating feedback mechanisms and dynamic learning algorithms, we could create AI systems that are more flexible, adaptable, and capable of handling complex, real-world tasks.